Introduction

Disinformation and misinformation on YouTube remain topics of considerable concern, especially in the context of elections. Despite the platform’s commitment to addressing online harms surrounding the 2024 European Parliament (EP) elections, a report by Maldita found that YouTube was the least effective social media platform in addressing reported misinformation, leaving 75 per cent of reported cases unaddressed. The European Digital Media Observatory (EDMO)’s Task Force on the 2024 European Parliament Elections identified climate change, immigration, and the war in Ukraine as three major topics around which harmful narratives spread online during the campaign. This brief investigates the presence and sources of these narratives on YouTube, and the discourse around them, in the lead-up to and immediately after the elections.

To identify videos containing these narratives, we constructed queries based on terms associated with disinformation claims on these topics that had previously been debunked by fact-checking organisations such as EDMO. Using these queries, we identified 596 unique videos, uploaded between 1 April and 30 June 2024, that potentially contained disinformation. These videos, along with their comment sections and parent channels, were then analysed to identify trends and prominent topics. To identify the videos, we combined YouTube’s API search function with a third-party co-commenting analysis tool provided by the Digital Methods Initiative at the University of Amsterdam. While specific false or debunked claims could be identified through closer fact-checking, this analysis instead seeks to outline the trends in, and prominence of, content returned by queries related to disinformation narratives across the YouTube media ecosystem during the EP elections campaign. The process is meant to mimic the experience of an average user who searches for content related to these narratives, capturing the kinds of videos and information they might encounter within the YouTube media ecosystem.

Key Findings

- Of the three major topics in this analysis, queries relating to the ongoing war in Ukraine returned the highest number of videos. Over 77 per cent of the videos in the dataset were related to narratives about the conflict. Seventeen per cent of the videos were related to immigration narratives, and six per cent were related to climate. Most of these videos were in English.

- The videos identified in this analysis were uploaded by 131 unique channels, ranging from small, anonymous content creators to the official platforms of conspiratorial media personalities such as Tucker Carlson and Russell Brand. Many of these larger creators interviewed each other and appeared across several channels.

- The most prominent topics in videos covering the war in Ukraine were speculation about Ukrainian casualties and the imminent collapse of the Ukrainian Armed Forces, and alleged efforts by NATO to escalate the conflict to a nuclear exchange. Also featured were claims that Ukraine was actively persecuting Christians in an effort to destroy non-Orthodox Christianity, claims of an active NATO presence in Ukraine, and claims that the United States and Ukraine were behind the March 2024 terrorist attack in Moscow.

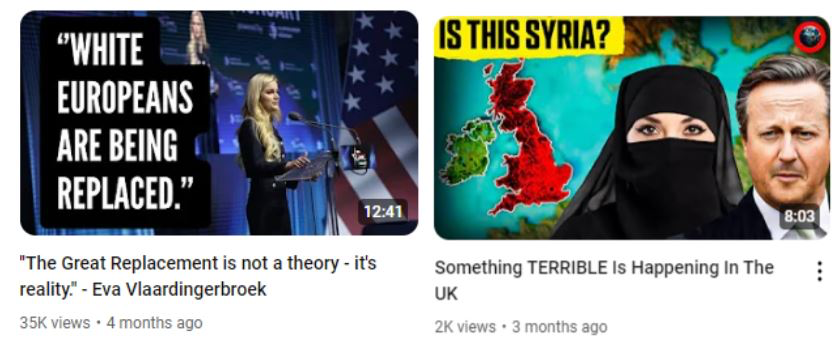

- Videos relating to immigration often alleged that migrants were taking power in European countries, entertained conspiracy theories such as the “great replacement”, and depicted migration and demographic changes in Europe as a war between the West and Islam.

- Videos relating to climate change were few in the dataset, but those that appeared covered topics such as chemtrails or claims that global warming was a myth and an excuse for governments and elites to seize land and power.

- Comments frequently reflected the rhetoric present in the videos, with users echoing conspiratorial narratives, thanking the hosts for speaking “the truth”, or quoting from scripture. For videos relating to immigration, users frequently invoked nationalist and xenophobic slogans, such as “send them back” and “Europe for Europeans”.

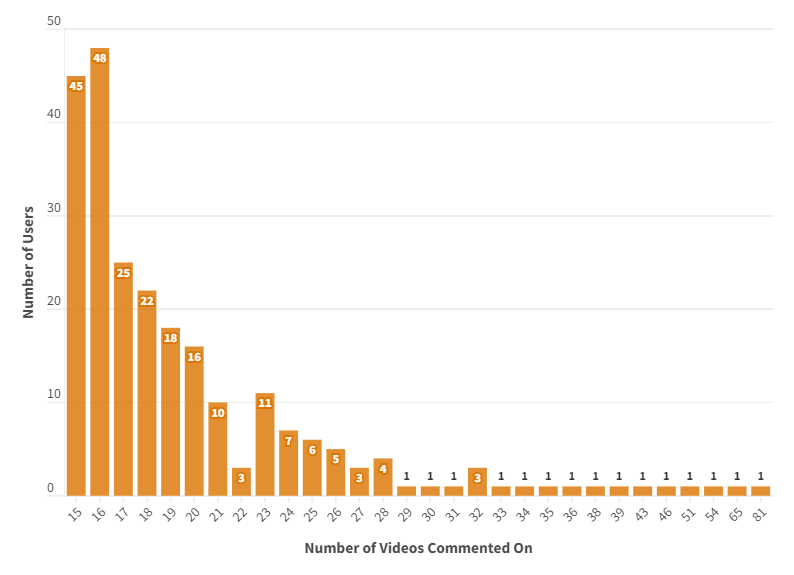

- We observed high rates of co-commenting across videos in the dataset. More than 240 users commented on 15 or more of the identified videos, often those relating to Ukraine, indicating that the videos identified are watched by a “community” of users. Our data also suggests that some of these users may be inauthentic.

Analysis

Video Analysis

Ukraine

Figure 1. Topic Analysis of Videos Covering the War in Ukraine

The figure above displays the major identifiable topics in the videos relating to Ukraine that had available transcripts. These transcripts were summarised with GPT-4o and then grouped by topic using BERTopic, a topic-modelling technique.

For Ukraine, some of the most dominant topics in the dataset were updates and commentary on the evolving front line, often favouring Russia. Queries returned videos predicting the imminent collapse of the Ukrainian Armed Forces, and Ukrainian President Volodymyr Zelensky “being finished” and preparing to flee the country (“Putin’s Men Wreak Havoc In Ukraine; Wipe Out Scores Of Ukrainians Troops & NATO Weapons”). Other videos portrayed Zelensky as corrupt (“Zelensky Looting Western Aid? NATO Nation’s Leader Fumes At ‘Most Corrupt Nation’ Ukraine”) or as directly responsible for the continuation of the conflict (“‘We WILL be at war for 10 years if this happens.’ Zelensky just admitted the truth”).

Equally prevalent were videos depicting NATO as aggressive, expansionist, and seeking to escalate the conflict and provoke a nuclear war with Russia. Video titles in this category ranged from celebratory (“Ukraine’s Army is being DECIMATED as Russia Prepares Finishing Blow to NATO”) to alarmist (“⚡ALERT: NATO PLACES NUCLEAR WEAPONS ON STANDBY, EMERGENCY MEETING OVER ANOTHER NUKE SUB NEAR UK”), frequently interpreting statements by Russia or NATO members as preludes to greater escalation. United States and EU aid to Ukraine was also the subject of much debate, with hosts insisting that elite capture and government corruption were the primary reasons Western governments support Ukraine (“‘Looters’: U.S Internet Rages Over $61B Aid To Ukraine; Memefest Erupts”).

Videos also often referenced escalating tensions involving North Korea, China, or Israel as proof of imminent nuclear conflict (“Israel Crossed Iran’s Red Line and TOTAL WAR is Coming”) or provided propagandistic analysis of the ongoing conflict in the Middle East (“Scott Ritter: Israel is LOSING this War and Iran will Destroy the IDF on All Fronts”). In addition, several videos that covered the war in Ukraine also discussed immigration, particularly in Europe and the United States, portraying government migration policy as a failure and responsible for criminal attacks.

Other videos alleged that Ukraine and the EU were behind the March terrorist attack in Moscow (“Russia Finds ‘More Proof’ Against Ukraine Over Role In Moscow ISIS Attack; ‘Explosives Sent Via EU’”) and that Ukraine’s Armed Forces had been so depleted that they were relying on EU mercenaries (“Ukraine Gets ‘Fighters From France, Poland’ as Russia Set To Capture Kharkiv”).

Also highly prominent in the dataset was commentary by Scott Ritter, a former US intelligence officer and ex-UN weapons inspector turned political contributor for Kremlin-backed media outlets Russia Today, Sputnik, and Channel One. Ritter has close ties to Moscow and, in August, had his residence raided by the FBI. Ritter’s commentary on the war in Ukraine featured heavily in the videos collected for this research, often appearing across several channels to give remote interviews (e.g., “Scott Ritter: Russia has DESTROYED NATO’s Military Strategy and Europe is Falling Apart” and “Scott Ritter: Ukraine is FINISHED After Making This Move and NATO is Terrified”). Such videos included estimates that Ukraine had lost over “one million men” so far, and that US and EU troops were fighting directly in the conflict as mercenaries. In June, Ritter had his passport seized while trying to leave the United States for a speaking event in Russia. Several videos in the dataset, many of which feature Ritter himself, insist that this was an attempt by the US government to censor the truth (“PASSPORT SEIZED! Scott Ritter Taken Off Airplane While Traveling To Russia”).

Immigration

Immigration was the second-most prominent topic in the dataset, with 104 videos identified within the period of analysis. Many of these videos covered crimes committed by migrants or people with an immigrant background (“How Ireland is Devastated by its Immigration Crisis”), claimed that migrants were taking power in Europe (“Migrants Are TAKING OVER This Spanish City”), or supported the “great replacement” conspiracy theory (‘“The Great Replacement is not a theory – it’s reality.” – Eva Vlaardingerbroek’). Disinformation videos relating to migration covered a broad range of national contexts, from the United Kingdom and Ireland to Germany, Poland, and Sweden.

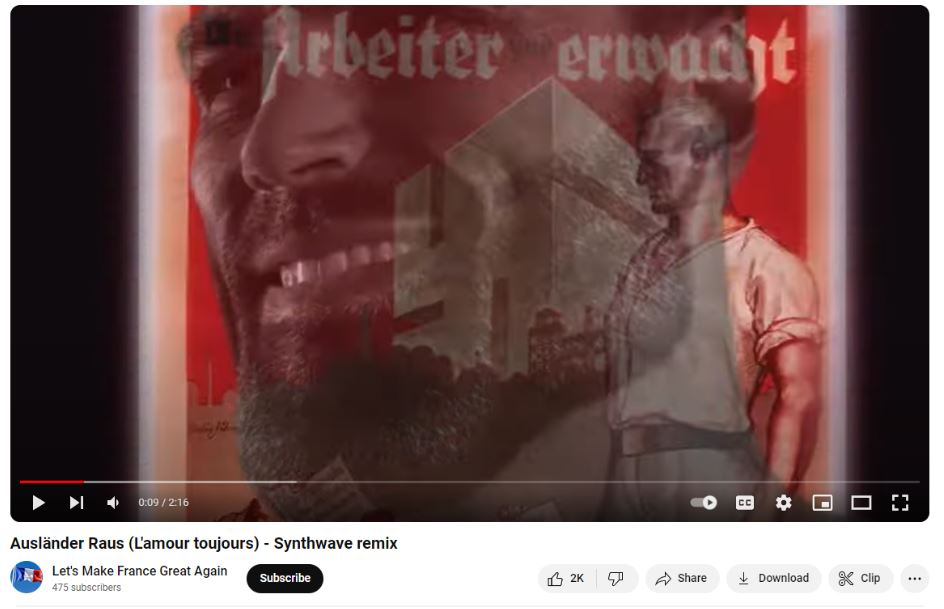

Also identified in the queries were several music video remixes of the song “L’Amour Toujours”, which has become a popular anthem among the far-right in Europe since its repurposing with the lyrics “Ausländer raus” (“foreigners out”). Such remixes were often posted by small channels with few subscribers, yet generated outsized engagement.

Above: A screenshot from a music video posted by “Let’s Make France Great Again” that features Nazi symbolism and propaganda. Despite the channel having only a few hundred subscribers, the video has been viewed over 44,000 times.

Climate

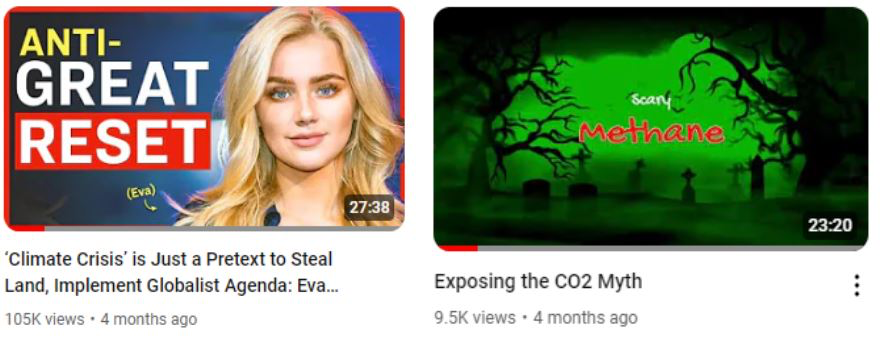

Queries relating to climate disinformation returned the fewest videos. Several of the videos identified repeated familiar narratives, such as chemtrails (“Chemtrails, or the sky is falling…”) or the claim that climate change is a plot for globalist elites to seize greater power (“‘Climate Crisis’ is Just a Pretext to Steal Land, Implement Globalist Agenda: Eva Vlaardingerbroek” and “Tucker Exposes Klaus Schwab and the Ruling Elite”).

Channel Analysis

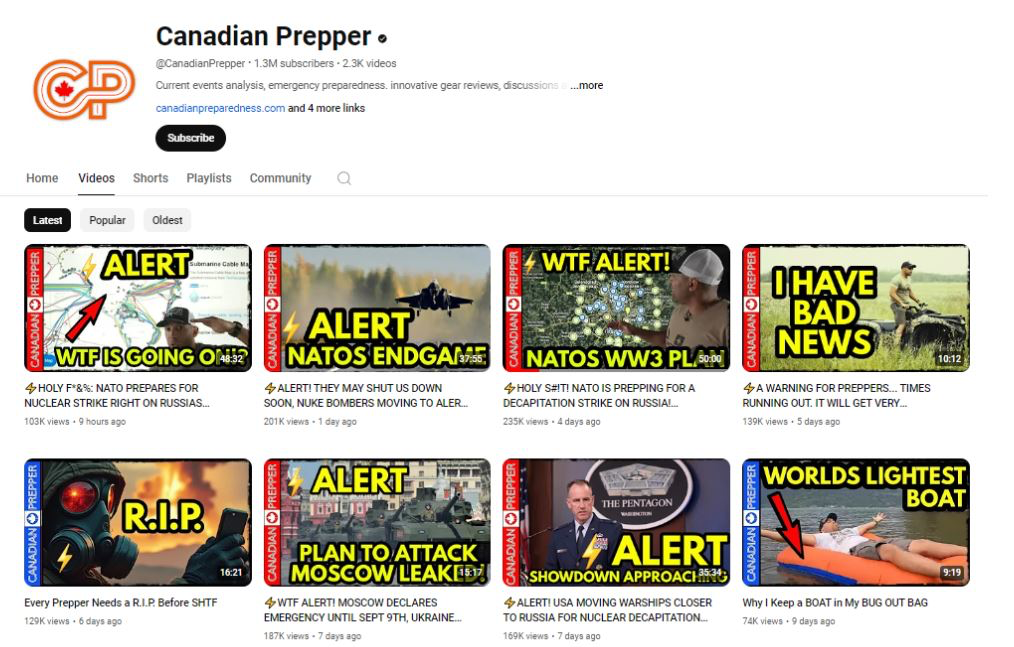

The videos identified in this research can be traced back to 131 channels. Some, such as those of Tucker Carlson or Russell Brand, are the official outlets of prominent commentators, with millions of subscribers and high levels of engagement on each video. Other channels were much smaller, with only several hundred subscribers. Several channels were self-described members of the “prepper community”, individuals who stockpile weapons, food, and survival kits in preparation for societal collapse. Videos on these channels were often highly alarmist in nature, referring to ongoing geopolitical conflicts in Ukraine and the Middle East as preludes to nuclear war, while also advertising their own survivalist products to subscribers (“⚡ALERT: 90,000 NATO TROOPS NUCLEAR DRILL, 4 HORSEMAN! IRAN AND NORTH KOREA ICBMS, DROUGHT, MEGAFIRES”).

Content featuring US far-right political commentator Tucker Carlson was also prevalent in the Ukraine dataset (“Leftist Reporter Challenges Tucker On Putin and Covid, Instantly Regrets It”, “McConnell SHANKS Tucker Carlson For Ukraine Aid Skepticism”). Videos on Carlson’s channel are often multiple hours long and cover several topics, including immigration. Carlson frequently equates Western aid to Ukraine with “tempting World War III” and supporting a “Nazi regime” (“Dave Smith: Russia, Israel, Trump & the Swamp, Obama, and the Media Attacks on Joe Rogan”). In one video, which received over 400,000 views, Carlson claims that Ukraine is “one of the most repressive countries in the world” and is specifically persecuting non-Orthodox Christians (“Exposing Ukraine’s Secret Police and Mission to Exterminate Christianity”).

The channel “Canadian Prepper” has over 1.3 million subscribers and posts almost daily about imminent nuclear war and the necessity of buying its sponsored products.

Two channels that appeared frequently in the dataset were those of the Indian news outlets the Hindustan Times and the Times of India, with 63 and 33 videos identified, respectively. These channels frequently covered the war in Ukraine in favour of Russia, often sharing footage and coverage directly from Kremlin-backed media outlets such as Russia Today (“Putin’s Team Arrest The Man Who Recruited Moscow Terrorists & 3 Others”). While videos from these outlets may avoid directly engaging with disinformation narratives, their coverage and headlines often imply falsehoods, such as direct EU military involvement in Ukraine, or the CIA and Ukraine being behind the Moscow terrorist attack (“NATO ‘Exposed’ By Its Own Member; ‘”).

By cutting off the quote by a Russian official, the headline above implies the United States was directly behind the terrorist attack.

Several “pseudo-media” sites – outlets that do not abide by professional journalistic standards – were also present in the dataset. One such organisation was Tenet Media, recently removed from YouTube after being implicated in an indictment by the US Department of Justice for receiving $10 million from individuals linked to the Russian government.[7] Tenet Media hosted several American far-right political commentators, such as Tim Pool and David Rubin, and frequently trafficked in conspiracies around immigration and the war in Ukraine.[8] One video by Tenet Media, since taken down, was titled “The Great Replacement: Can We Finally Talk About It? | Lauren Southern”. Another prominent pseudo-media channel is Redacted News, which is co-hosted by former Fox News anchor Clayton Morris and former MSNBC reporter Natali Morris, and has over 2.4 million subscribers. Videos by Redacted News often refer to a “deep state group of elites” manipulating world events, and their coverage of the war in Ukraine is openly pro-Russian (“WARNINGS! ‘Ukraine will COLLAPSE in 2 weeks!’ as Putin readies massive offensive”, “It’s OVER for Ukraine and Zelensky can’t hide it ANYMORE”).

Also present in the dataset were several smaller channels with subscriber counts in the low thousands or fewer. Videos on these channels included the previously mentioned remixes of “L’Amour Toujours”.

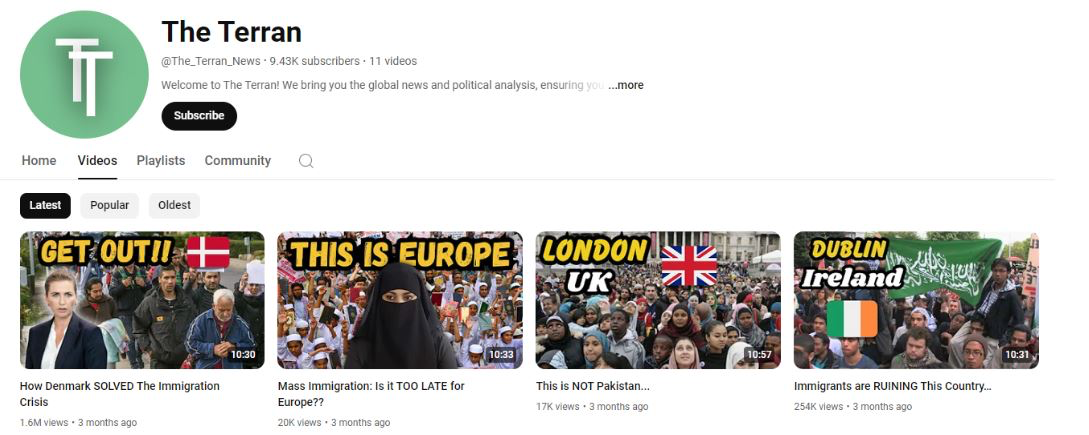

The channel “The Terran” claims to offer “global news and political analysis”, but focuses exclusively on anti-immigrant content.

As stated at the outset, this analysis does not attempt to fact-check the identified content. However, the frequently conspiratorial, alarmist, and biased nature of much of this content, as revealed by qualitative review and topic modelling of transcripts, indicates that many of the videos identified can, at the very least, be described as associated with disinformation narratives.

Comment Analysis

For each topic, the comments from all videos were extracted and analysed.

Immigration

The comments section in videos relating to immigration heavily featured extreme nationalist sloganeering and discussions of immigration in various national contexts, from Germany to Ireland to Sweden. Users entertained popular conspiracy theories, such as the “great replacement”, or openly expressed a desire to deport all foreigners from Europe.

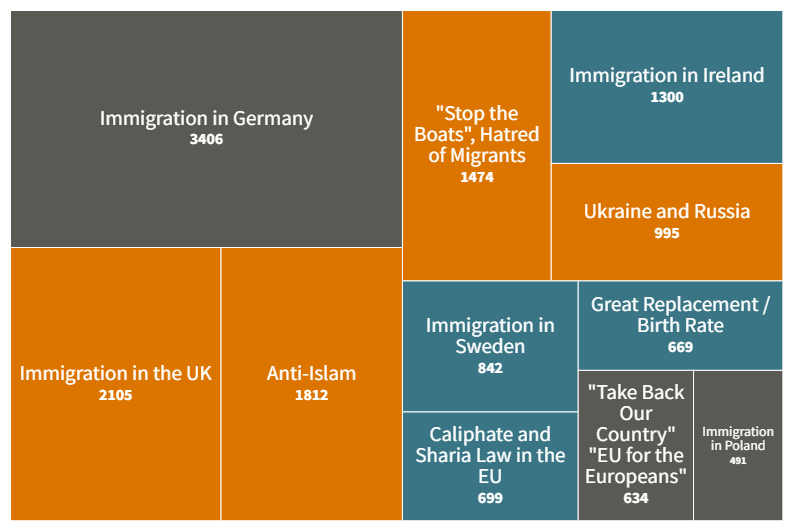

Figure 2. Topic analysis of comments on videos covering immigration

The figure above displays the major identifiable topics in the comment sections of the videos in the dataset relating to immigration.

Although most videos in the dataset were in English, nationalist and anti-immigrant sentiment in French, German, and Polish was frequent in the comments. Users echoed common xenophobic slogans, such as “Ausländer Raus!” (“foreigners out”), “send them back!”, and “stop the boats!”. The three most-liked comments across all videos relating to immigration in the dataset were identical: “Europe for the Europeans”.

Ukraine

Comments under videos relating to the war in Ukraine often reflected the information in the video, with users expressing support for Russia’s invasion (“Russia will win!!!” or “President Putin ❤️”) or thanking the channel for “exposing mainstream media’s lies”. Commenters in this category frequently invoked biblical passages, particularly under videos claiming knowledge of an imminent nuclear escalation. Because many videos in this dataset touched on the ongoing war in Gaza and tensions in the Middle East, many comments also discussed Israel and Palestine; support for Israel appeared to be a particularly divisive topic among commenters. Other commenters expressed support for strongman politicians such as former US President Donald Trump or Hungarian Prime Minister Viktor Orbán, while decrying US President Joe Biden, German Chancellor Olaf Scholz, and French President Emmanuel Macron.

Climate Change

Commenters under videos relating to climate change often expressed their belief that global warming is “a hoax”. Many users in this section also invoked passages from the Bible, or simply expressed their support for the channel and its hosts. Others expressed support for farmers’ protests in France, Germany, and the United Kingdom. Users also expressed belief in a “deep state” or cabal of government elites as being behind the global push to combat climate change.

Figure 3. Rates of Co-Commenting Across Videos

The above image displays the distribution of users who commented on 15 or more videos in the total dataset.

An analysis of the comments across all videos revealed many users who commented on multiple videos, sometimes across different topics. In total, over 240 users commented on 15 or more videos in the dataset, as can be observed in the graph above. One user commented on 81 separate videos, all of which were related to Ukraine. This user’s comments were often pro-Russian and pro-Iranian in sentiment, and many were identical (“The proxy war in Ukraine is the first time NATO has tried to pick on someone their own size and NATO is getting humiliated” was posted as a comment on 15 separate videos). Several other users who commented on 15 or more videos were also observed posting identical comments across videos, indicating possible inauthentic behaviour (e.g., “If you stand with Ukraine you support the WEF, WHO, Military industrial complex, and the Globalist elites” was posted by one user six times across videos). When users commented across topics, it was often on videos related to Ukraine or to immigration.
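The two counts described above (users who commented on 15 or more videos, and users posting the same text on several videos) can be illustrated with a short Python sketch. It assumes, hypothetically, that comments are available as `(user, video_id, text)` tuples, and it treats two comments as identical after collapsing whitespace and ignoring case. That normalisation is an assumption of this sketch rather than the brief’s documented procedure, and stricter or looser matching would change the counts.

```python
from collections import defaultdict


def videos_per_user(comments):
    """Map each user to the set of distinct videos they commented on."""
    by_user = defaultdict(set)
    for user, video_id, _text in comments:
        by_user[user].add(video_id)
    return by_user


def heavy_commenters(comments, min_videos=15):
    """Users who commented on at least `min_videos` distinct videos, with their video counts."""
    return {
        user: len(videos)
        for user, videos in videos_per_user(comments).items()
        if len(videos) >= min_videos
    }


def repeated_comments(comments, min_videos=2):
    """(user, normalised text) pairs that were posted on at least `min_videos` distinct videos."""
    seen = defaultdict(set)
    for user, video_id, text in comments:
        # Assumed normalisation: collapse runs of whitespace and ignore case,
        # so " The proxy war..." and "the proxy war..." count as the same text.
        normalised = " ".join(text.split()).lower()
        seen[(user, normalised)].add(video_id)
    return {key: len(videos) for key, videos in seen.items() if len(videos) >= min_videos}
```

Counting distinct video IDs per user, rather than raw comment counts, keeps a single long thread on one video from inflating a user’s apparent reach across the dataset.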

Our analysis of the comments shows that, across topics, conspiratorial and eschatological sentiments were common, suggesting that a broad audience receptive to content encouraging such extreme beliefs is active on YouTube. The similarity of the content in these comments, together with the many users we identified posting identical comments across videos, may indicate coordinated and inauthentic behaviour.

Implications of findings and recommendations

The concept of “disinformation narratives” is often used to group multiple and diverse phenomena, including rumours, inaccuracies, conspiracy theories, election manipulation attempts, and state propaganda. Such conceptual blurring complicates the task of identifying effective responses. The link between disinformation narratives, particularly those related to health crises, conflicts, or elections, and public harm is already well established. Aggressive countermeasures can also pose risks, however, especially regarding potential infringements on freedom of expression and freedom of the press.

While we acknowledge these challenges, our analysis suggests that YouTube has not been diligent in implementing even minimal measures to address the spread of narratives and claims that have often been debunked by fact-checkers such as EDMO and others in the European Fact-Checking Standards Network. This aligns with the findings of the Maldita research mentioned above, which showed that YouTube took action on only 24.5 per cent of the videos flagged by fact-checkers, either by removing them or by adding source indicators or information panels.

Our study shows that a simple approach, based on querying for known disinformation narratives about climate change, immigration, and the war in Ukraine, particularly when combined with conspiratorial terms, easily returns a wide range of videos from both obscure and well-known sources that promote unsubstantiated conspiracy theories or, at the very least, inaccurate information.

YouTube has introduced multiple measures to address misinformation. The platform removes content in three specific cases: (i) when it suppresses census participation, (ii) when it is technically manipulated or doctored in a way that poses a significant risk of harm, and/or (iii) when it falsely claims that old footage from a past event is from a current event, posing a serious risk of harm. Besides removal, the platform also takes action against misinformation by reducing recommendations of borderline content – videos that approach, but do not fully violate, its Community Guidelines – while also prioritising authoritative sources for news and rewarding trusted creators. YouTube has also stated that it uses information panels, featuring content from independent third parties, to provide context on topics that are “prone to disinformation”, such as moon landings, elections, health, and crises. Research has shown that labels providing additional context for false or unreliable content can reduce the likelihood of users believing or sharing it, while serving as a non-intrusive measure that upholds freedom of expression.

In our study, we did not assess whether the videos violated YouTube’s Community Guidelines, as fact-checking each video was beyond the scope of this analysis. We can confidently state, however, that a portion of the analysed videos fall at least into the category of “borderline content”, meaning they contain misleading information on sensitive topics that could pose a public risk. We found that YouTube did not systematically use information panels, source indicators, or any other tools to provide additional context or direct users to authoritative sources in the videos analysed in this brief. This omission is of particular concern given that the three topics of the analysis (climate change, immigration, and the war in Ukraine) are widely recognised by experts and industry as especially prone to misinformation. Due to limitations of the data accessible via the YouTube API, we could not verify whether the platform acted on these borderline videos, for example by demoting them or excluding them from recommendations.

YouTube’s failure to take minimal action, such as utilising information panels or source indicators to counter the spread of debunked misinformation narratives, suggests that the platform is not fully honouring its commitments under the EU’s Code of Practice on Disinformation. Measure 22.7 of the Code specifically states that “relevant Signatories will design and apply products and features (e.g. information panels, banners, pop-ups, maps, and prompts, trustworthiness indicators) that guide users to authoritative sources on topics of particular public and societal interest or in crisis situations.” This also suggests that YouTube may not have adequately addressed the systemic risks posed by these narratives to the EP elections, and to civic discourse more broadly. The spread of misleading narratives in the months before the elections could have directly influenced voters, distorting their understanding of key issues and, in turn, their voting choices.

Hateful Content: A Video Featuring Nazi Symbolism and Promoting Anti-Migrant Sentiment

We identified at least one instance of hateful content. A video posted by the account “Let’s Make France Great Again” prominently features Nazi symbolism on several occasions. Approximately every five seconds, the video briefly displays a well-known poster from circa 1932 featuring the Nazi symbol and the phrase “Wir Arbeiter sind erwacht” (“We workers have awoken”). This poster is recognised as a piece of historical propaganda from the National Socialist era. The video is also set to a remix of the song “L’Amour Toujours”, which, as noted above, has been repurposed by far-right groups in Europe with the lyrics “Ausländer raus” (“foreigners out”). The video clearly aims to promote anti-immigrant sentiment, as evidenced by hashtags in its description such as #nationalism, #europeforeuropean, #deutschland, and #auslanderraus. This type of content violates YouTube’s policy on violent extremist or criminal organisations, which explicitly states that the platform will remove “content that depicts the insignia, logos, or symbols of violent extremist, criminal, or terrorist organisations in order to praise or promote them.” We reported the video to YouTube. The platform has restricted access to the video in Germany but, as of the time of writing of this brief, it had not made a final decision regarding our complaint. Although we also reported the video from other EU countries, it has not been taken down there.

Challenges Related to Data Access and YouTube’s API

While the YouTube API is relatively straightforward and accessible, future research would benefit from the ability to apply more specific filters when generating queries, such as limiting searches to video titles, captions, or both. Additionally, greater transparency is needed regarding the representativeness of the data retrieved through the API and how closely it corresponds to the results of standard user searches; this would help researchers better understand the real-world transferability of their findings. Furthermore, the YouTube API does not allow researchers to track user comments or activity history in any meaningful way, significantly limiting the ability to investigate inauthentic behaviour or coordinated campaigns. Improved access to such data would greatly enhance the effectiveness of research in these areas. Finally, as mentioned above, it is currently impossible to determine the degree to which YouTube demotes or excludes certain videos from its recommendations.

Methodology

For each topic, we qualitatively curated at least two dozen unique English-language keyword queries deemed likely to return videos with disinformation about that subject. These queries were often selected based on their connection to disinformation narratives identified in the EDMO report, or built by adding “conspiratorial keywords” (e.g., “exposed”, “the truth about”, “false flag”, “deep state”) to otherwise innocuous searches. For example, to find disinformation videos relating to the war in Ukraine, queries such as “Zelensky drugs” and “NATO false flag” were tested. The full list of search queries used per topic can be found in the appendix of this report.
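The query-construction step can be illustrated with a minimal Python sketch. It assumes, for illustration only, that each innocuous base term is combined with each conspiratorial keyword (with “the truth about” used as a prefix and the others as suffixes), and that each resulting query is turned into parameters for the YouTube Data API v3 `search.list` endpoint using the country code “DE”. The helper names `expand_queries` and `search_params`, the date window, and the `maxResults` value are hypothetical choices, not the brief’s documented settings; the sketch only builds request parameters and does not call the API.

```python
from itertools import product

# Keywords quoted in the methodology; the complete query lists are in the appendix.
CONSPIRATORIAL_KEYWORDS = ["exposed", "the truth about", "false flag", "deep state"]

# Assumption: "the truth about" reads naturally as a prefix; the others are appended.
PREFIX_KEYWORDS = {"the truth about"}


def expand_queries(base_terms, keywords=CONSPIRATORIAL_KEYWORDS):
    """Combine each innocuous base term with each conspiratorial keyword (hypothetical helper)."""
    queries = []
    for term, keyword in product(base_terms, keywords):
        if keyword in PREFIX_KEYWORDS:
            queries.append(f"{keyword} {term}")
        else:
            queries.append(f"{term} {keyword}")
    return queries


def search_params(query, region_code="DE",
                  published_after="2024-04-01T00:00:00Z",
                  published_before="2024-07-01T00:00:00Z",
                  max_results=50):
    """Build keyword arguments for a YouTube Data API v3 search.list request.

    The date window and max_results are illustrative assumptions; nothing is sent here.
    """
    return {
        "part": "snippet",
        "q": query,
        "type": "video",
        "regionCode": region_code,
        "publishedAfter": published_after,
        "publishedBefore": published_before,
        "maxResults": max_results,
    }


if __name__ == "__main__":
    for q in expand_queries(["Zelensky", "NATO"]):
        print(search_params(q))
```

With the `google-api-python-client` package, such a dictionary could be passed as `youtube.search().list(**params).execute()`, where `youtube` is a client created with `googleapiclient.discovery.build("youtube", "v3", developerKey=...)`. An API key is required, and each search request consumes quota.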

We used the YouTube API to conduct searches based on these queries with the country code “DE”, meaning that our results should reflect the experience of a user performing the queries in Germany. For each topic, the videos returned by the YouTube API for each query were added to the dataset. Although the queries were in English, the API also returned several non-English-language videos. The dataset was then manually cleaned to remove videos uploaded by mainstream news network channels, which typically discuss disinformation narratives only when debunking them. The remaining videos were then fed into the co-commenting tool of the Digital Methods Initiative’s YouTube Data Tools, which, for each video in the dataset, identifies the number of commenters it shares with the comment section of every other video. This step was added on the premise that the videos of interest to this analysis are promoted to, and affect, a connected community of users, which can best be identified by looking at who comments on these videos. Every video with a co-commenting value below the threshold of 10 was then dropped, resulting in a dataset of 596 unique videos uploaded by 131 unique channels. The comments and transcripts of these videos were retrieved and analysed across topics with BERTopic. Of the 596 videos, 454 had transcripts generated from closed captions that could be retrieved through the API. To enable topic analysis of the transcripts (some of which came from videos one to two hours long), we first summarised all transcripts with OpenAI’s GPT-4o using the prompt “Please summarize the following video transcript, presenting the main topics:”. These summaries were then used to create topic analyses for each topic (Ukraine, immigration, climate) using BERTopic.
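The co-commenting filter can be sketched in a few lines of Python. The brief relied on the Digital Methods Initiative’s tool rather than custom code and does not specify exactly how the threshold of 10 is applied; this sketch assumes one plausible reading, namely that a video is kept if it shares at least 10 commenters with at least one other video in the dataset. The function names and the input format (a mapping from video ID to a set of commenter IDs) are hypothetical.

```python
from itertools import combinations


def co_comment_counts(commenters_by_video):
    """Count shared commenters for every pair of videos.

    commenters_by_video maps a video ID to the set of user IDs that commented on it.
    Returns {(video_a, video_b): number_of_shared_commenters}, omitting pairs with none.
    """
    counts = {}
    for a, b in combinations(sorted(commenters_by_video), 2):
        shared = len(commenters_by_video[a] & commenters_by_video[b])
        if shared:
            counts[(a, b)] = shared
    return counts


def filter_by_co_commenting(commenters_by_video, threshold=10):
    """Keep videos sharing at least `threshold` commenters with some other video (assumed rule)."""
    keep = set()
    for (a, b), shared in co_comment_counts(commenters_by_video).items():
        if shared >= threshold:
            keep.update((a, b))
    return keep


if __name__ == "__main__":
    example = {
        "v1": {f"u{i}" for i in range(12)},  # users u0..u11
        "v2": {f"u{i}" for i in range(15)},  # users u0..u14, so 12 shared with v1
        "v3": {"x", "y"},                    # no overlap with the other videos
    }
    print(filter_by_co_commenting(example))  # v1 and v2 are kept; v3 is dropped
```

Comparing every pair of videos is quadratic in the number of videos. This is manageable for a few hundred videos, but a larger dataset would call for an inverted index from users to videos.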

One limitation of the topic-modelling approach is that BERTopic assigns each video transcript a single topic, even if the video is multiple hours long and covers multiple topics. Moreover, while using GPT-4o allowed us to summarise and topic-model transcripts that would otherwise be challenging for a topic model to interpret (for instance, videos longer than 30 minutes covering many different topics), the summaries frequently dropped the more conspiratorial and explicit language used in the videos. As a result, the summaries fed into the topic model were more “sanitised” and rational than the original transcripts. Future research could experiment with different summarisation prompts to produce summaries that better reflect the original language of the videos.

Appendix

Search queries – Ukraine

| “Zelensky Nazi” | “Zelensky corrupt” | “Zelensky drugs” | “Zelensky exposed” |
| “the truth about Zelensky” | “the truth about NATO” | “NATO exposed” | “Bandera NATO” |
| “NATO mercenaries” | “EU mercenaries ukraine” | “EU invasion” | “NATO azov” |
| “ukraine neo-Nazis” | “Zelensky yacht” | “Zelensky Azov” | “fascist Ukraine” |
| “fascist NATO” | “Ukraine false flag” | “Ukraine hoax” | “Ukraine fake news” |
| “Ukrainian propaganda” | “Putin liberation” | “Zelensky puppet” | “Ukraine puppet government” |
| “Ukrainian war crimes” | “Bucha hoax” | “NATO provocation” | “Ukraine CIA puppet” |
| “Ukraine scandal” | “NATO Nazis” | “Zelensky scandal” | “Ukraine warmongering” |
| “NATO nuclear war” | “NATO world war iii” | “Ukraine hidden agenda” | “Ukraine cover-up” |
| “Ukraine bio labs” | “Ukraine collapse” | “Ukraine destroyed” | “Ukraine apocalypse” |
| “Ukraine deep state” | “Ukraine globalist” | “Ukraine agenda” | “Ukraine steals” |

Search queries – Immigration

| “migrant crisis exposed” | “deep state migrants” | “globalist migrants” | “globalism Islam” |
| “migrants taking over” | “migrant invasion” | “migrant agenda” | “migrant replacement” |
| “great replacement” | “Europe migrant invasion” | “immigrant attack Europe” | “European caliphate” |
| “white genocide” | “migrant revolt” | “migrant crime” | “Europe for Europeans” |
| “Britain migrant invasion” | “Sweden migrant invasion” | “crisis of migrants” | “send them back” |
| “stop the boats” | “Muslim invasion Europe” | “migrant chaos” | “migrants outvoting” |

Search queries – Climate

| “climate agenda exposed” | “climate crisis hoax” | “climate hoax” | “deep state climate” |
| “global warming hoax” | “global warming fake” | “global warming chemtrails” | “global warming agenda” |
| “fake climate science” | “CO2 myths” | “global warming myth” | “climate change myth” |
| “false alarm climate change” | “false alarm global warming” | “farmers climate change EU” | “green energy scam” |
| “green agenda exposed” | “green energy hoax” | “carbon zero exposed” | “carbon tax scam” |
| “climate change debunked” | “global warming debunked” | “climate change no evidence” | “global warming no evidence” |
| “CO2 lie” | “fossil fuel truth” | “climate change alarmism” | “farmers revolt climate” |
| “Klaus Schwab exposed” | “deep state global warming” | “elite agenda climate change” | “eat the bugs” |

This project was made possible thanks to the financial support of the Culture of Solidarity Fund powered by the European Cultural Foundation in collaboration with Allianz Foundation and the Evens Foundation.