This report was written by Francesca Giannaccini, Ognjan Denkovski, and Duncan Allen. This paper is part of the access://democracy project funded by the Mercator Foundation. Its contents do not necessarily represent the position of the Mercator Foundation.

Executive Summary

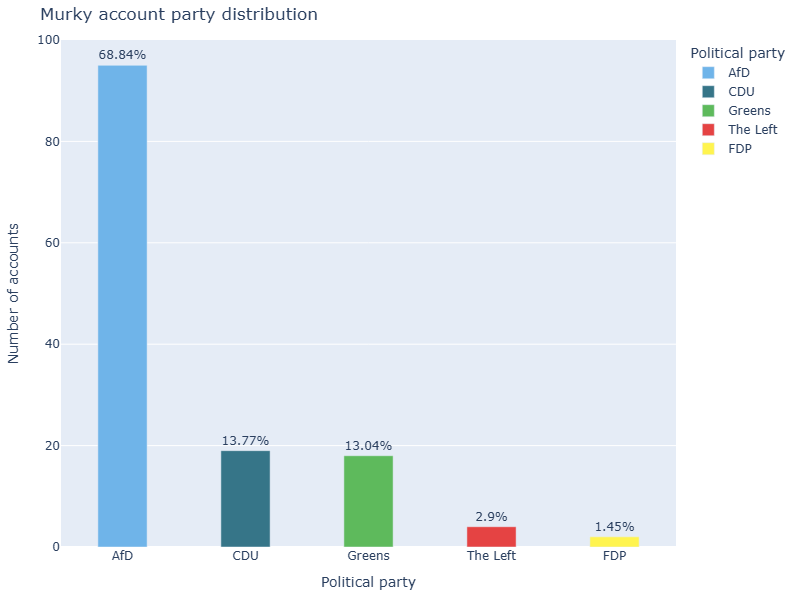

With the German federal elections approaching, DRI continues to investigate the prevalence and spread of “murky” political accounts on TikTok. In previous reporting, DRI found murky accounts to be a persistent problem, one which continues to challenge TikTok’s enforcement of its policies and represents a threat to online electoral discourse on the platform across numerous EU member states.1 In this study, we found that the AfD party remains the largest beneficiary of these accounts in Germany by a significant margin. While we also identified murky accounts supporting other parties, suggesting that they or their supporters may be adopting a similar approach, their follower counts and engagement levels were largely insignificant in comparison.

- Using manual data exploration and collection, we identified 138 murky accounts on TikTok impersonating and/or promoting major German parties or politicians.

- Of these, a majority (95, or 68.8 per cent) of the accounts found promoted the AfD, either by presenting themselves as the profiles of prominent politicians or as official party accounts. Twenty of these AfD accounts were created just ahead of the election, in January 2025. Pro-AfD accounts were not only the most prevalent and easy to identify, but also had the highest number of followers, with 27 having between 5,000 and 130,000 followers.

- We also identified murky accounts promoting other parties, albeit in smaller numbers: the CDU (13.8 per cent), Alliance 90/The Greens (13 per cent), The Left (2.9 per cent), and the FDP (1.4 per cent), most with significantly fewer followers than the average pro-AfD account.

- As of 18 February, TikTok had removed 112 of these accounts, 96 after we reported them and 16 proactively.

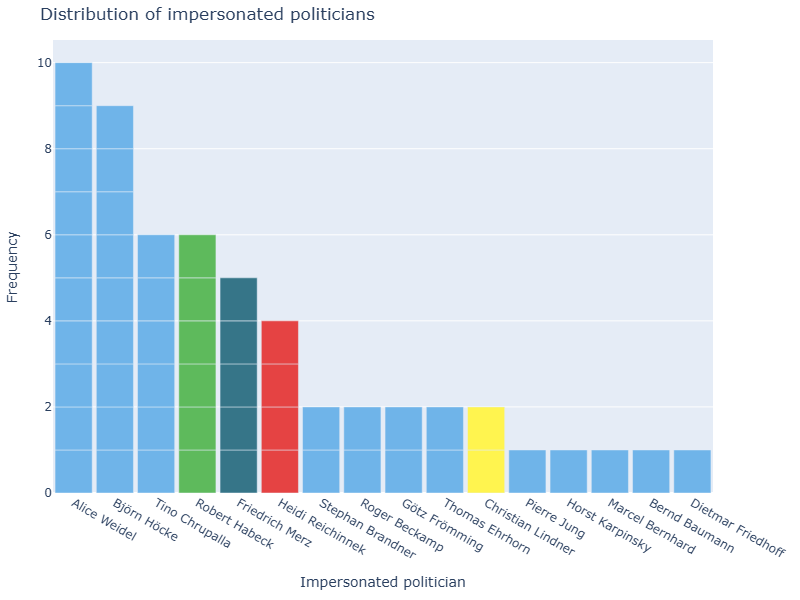

- Most murky accounts (59.4 per cent) impersonated party accounts, rather than individual politicians. The most impersonated politicians were AfD leaders Alice Weidel and Björn Höcke, with ten and nine murky accounts, respectively. Outside of the AfD, Heidi Reichinnek, of The Left, was also impersonated, with all four murky accounts linked to her party promoting her.

- Of the 937 videos uploaded by murky accounts active between 8 January and 10 February, the vast majority (909, or 97 per cent) were uploaded by accounts promoting the AfD. Murky accounts linked to the AfD also significantly outperformed other parties in the engagement metrics for videos in this period.

Together, these findings paint a concerning picture of the presence and success of murky political accounts on TikTok. While accounts promoting the AfD were the most numerous and active, and generated the greatest engagement – showing the outsized use of this tactic by the AfD or its supporters relative to other parties – the presence of murky accounts promoting other parties speaks to the current (and, in the future, potentially much larger) scale and persistence of this issue. While 112 of these accounts have since been removed by TikTok, the apparent ease with which murky profiles can be created at scale suggests the need for stricter profile creation regulations and more vigilant policy enforcement by TikTok. In addition, research efforts such as this one can only ever paint a partial picture of the true extent of the issue, given the inaccessible nature of TikTok’s Virtual Compute Environment (VCE), the data access solution that TikTok offers to NGOs, which forces analyses like this to be conducted using time-consuming and limited manual methods.

Introduction

The 2025 German Federal elections are taking place during a particularly polarising election season, marked in large part by the question of how most parties position themselves towards the AfD. Notably, the collapse of the “Brandmauer” (firewall) – a political taboo wherein mainstream parties refrain from voting alongside the far-right – has been met with outrage and widespread demonstrations. Meanwhile, concerns about the role manipulation and disinformation might play in the elections have also grown significantly.2 Such concerns should, perhaps, be particularly pronounced for TikTok, which has emerged as a key battleground for German political discourse, by offering parties a way to reach and influence large numbers of (young) voters.

Among the critical risks related to TikTok are the impersonation of political figures or parties and coordinated inauthentic behaviour, both of which continue to plague the platform and expose significant gaps in its efforts to enforce its own policies. While content posted by verified government, politician, and party accounts (GPPPA) faces several restrictions – such as a lack of access to incentive programmes, monetisation features, and advertising and fundraising options – these limitations do not apply to undeclared, “murky”, political accounts. As a result, anyone can easily create one or more such accounts without disclosure, circumventing TikTok’s stated policies against the promotion and monetisation of political content. DRI, which has been investigating and reporting on these threats since last year’s European Parliament elections, defines a “murky” account as:

A TikTok account of questionable affiliation that presents itself as an official government, politician, or party account (GPPPA) when, in fact, it is not. Murky accounts do not declare themselves as fan or parody pages, and can be interpreted as attempts to promote, amplify, and/or advertise political content.

We have previously argued that the prevalence of murky accounts on TikTok not only exposes clear gaps in the platform’s policy enforcement, but may also constitute a breach of EU law. In particular, TikTok’s poor enforcement may violate the platform’s obligation to identify and mitigate risks to democratic processes and civil discourse in the EU, as outlined in Articles 33 and 34 of the Digital Services Act (DSA). Furthermore, measure h) i) of the European Commission’s Guidelines on Electoral Integrity under the DSA specifically identifies impersonation, fake engagements, and coordinated inauthentic behaviour as potential risks to electoral processes.

Murky accounts both impersonate and can coordinate inauthentic behaviour. They can appear as copies of official political pages, using the same profile picture and descriptions, and sharing the same content. Other times, they may instead feature variations of party logos and slogans to appear as official affiliates. Since TikTok’s verified badges for political and party accounts are optional in the EU (unlike in the United States, where they are mandatory), the proliferation of such deceptive accounts creates important transparency issues, as users may easily mistake them for the official ones. Some murky accounts have been observed operating in tandem, indicating they may be part of an organised effort. In previous research, DRI found clear evidence on Telegram of the coordinated creation and activity of murky accounts on TikTok by the AfD’s youth wing.3 Other accounts may operate independently. Regardless of whether they are part of a coordinated effort, murky accounts tend to exhibit many profile similarities with and amplify consistent narratives from other ideologically like-minded murky accounts. This type of activity falls within TikTok’s own definitions of “deceptive behaviour”, published in the wake of the November Romanian elections, which specifically includes impersonation attempting to manipulate or corrupt public debate to impact the decision making, beliefs, and opinions of a community.4

Our team’s previous investigations into inauthentic behaviour on TikTok during the European Parliament elections found murky accounts of politicians and parties in Austria, Belgium, France, Germany, Italy, Poland, Portugal, Spain, and Sweden.5 Our research also contributed to a slew of evidence that far right Romanian presidential candidate Călin Georgescu’s surge in popularity was artificially amplified.6 Yet, despite extensive reporting and many of these profiles being easily identifiable through manual searches, we found that TikTok has failed to proactively address this issue. Once reported or flagged, the platform is often swift to remove the accounts for violating platform policies, yet little appears to have been done to prevent the creation and emergence of new murky account networks.

With the German 2025 federal elections upcoming, we once again investigated the prevalence and reach of murky accounts on TikTok, and assessed the extent to which the platform is enforcing its own policies and complying with EU law. In addition to flagging such accounts, we also collected key data about their characteristics and activities, such as follower counts, numbers of videos, engagement data, and more. Due to difficulties in collecting data via the TikTok VCE, the data collection for this project was conducted manually.

Our findings reveal the scope of murky account activity and reach on the platform, and show which German parties and politicians are benefiting the most from such promotion. As we do not have sufficient empirical data to make claims about any coordination efforts, we leave this evaluation to our readers.

Results

Our search for murky accounts resulted in the identification of 138 accounts impersonating German politicians and parties.

Murky account affiliation and features

The AfD was the most frequently impersonated party in our sample, with 95 out of 138 accounts (68.8 per cent). We found far fewer accounts supporting other parties, with 19 CDU accounts (13.8 per cent), 18 Alliance 90/The Greens accounts (13 per cent), four The Left accounts (2.9 per cent) and two FDP accounts (1.4 per cent). While the data clearly shows a much stronger presence in support of the AfD, it also shows that murky accounts are being deployed, or at least have been set up, to promote narratives and manipulate algorithms for messaging across the political spectrum.
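The shares above follow directly from the account counts. As a minimal sketch in Python, assuming standard rounding to one decimal place (the rounding convention is our assumption, so the last digit of a share may differ from a figure quoted in the text):

```python
# Account counts per party, as reported in this section.
counts = {
    "AfD": 95,
    "CDU": 19,
    "Alliance 90/The Greens": 18,
    "The Left": 4,
    "FDP": 2,
}

# Total number of murky accounts in the sample.
total = sum(counts.values())


def share(n: int, total: int) -> float:
    """Percentage share of n in total, rounded to one decimal place."""
    return round(100 * n / total, 1)


# Share of the sample attributed to each party.
shares = {party: share(n, total) for party, n in counts.items()}

for party, pct in shares.items():
    print(f"{party}: {counts[party]} of {total} accounts ({pct} per cent)")
```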

Figure 1

Candidate and politician impersonation as a secondary tactic

Most of the murky accounts we found impersonated political parties (82 of the 138 accounts, 59.4 per cent) rather than individual politicians. Of these, 56 were variations of the AfD party page, 14 of the CDU’s, and 12 of Alliance 90/The Greens’.

We identified accounts impersonating 16 politicians, 12 of whom were from the AfD. Party leaders such as Alice Weidel (ten), Björn Höcke (nine), and Tino Chrupalla (six) had the highest number of impersonators.

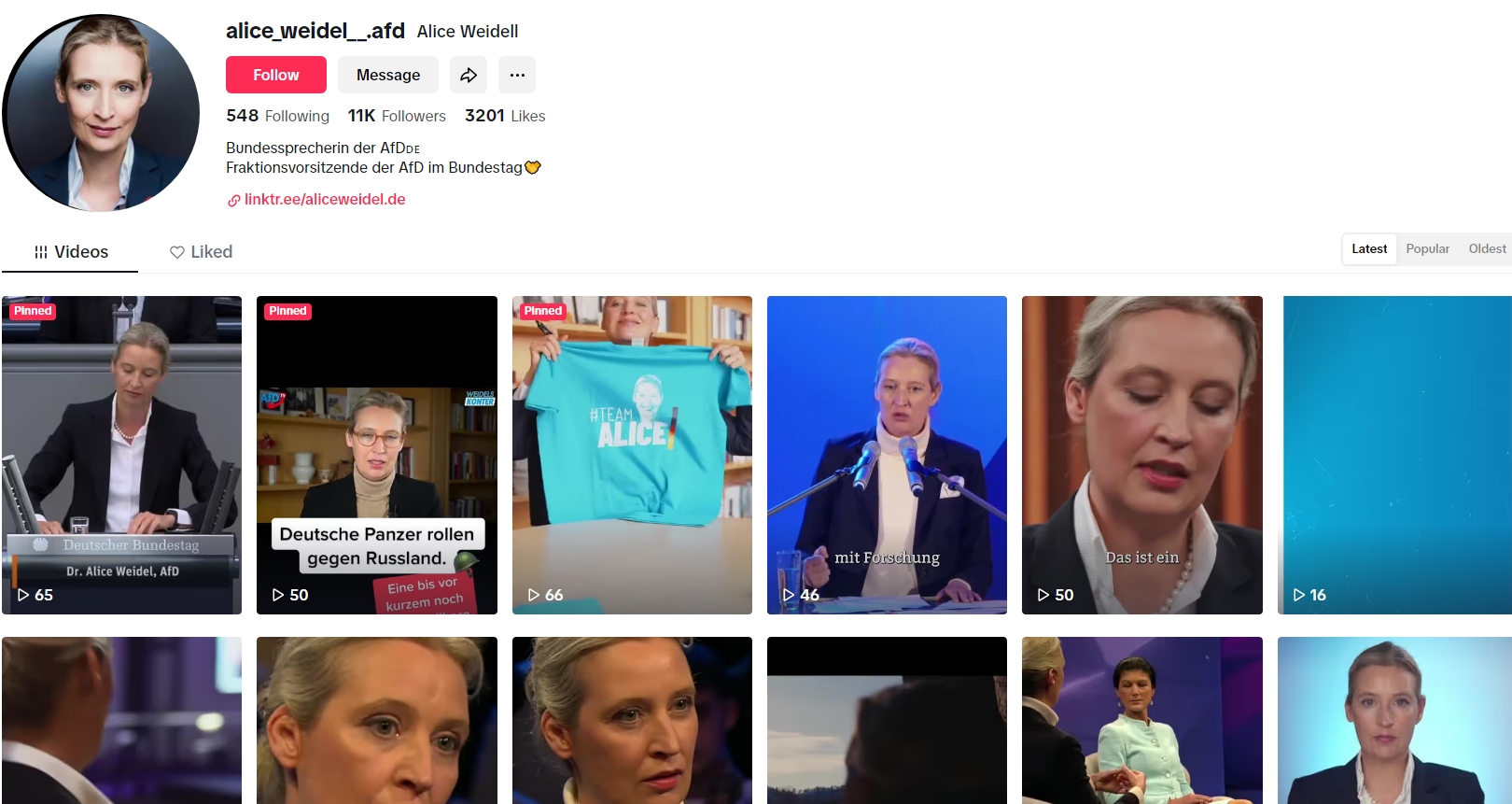

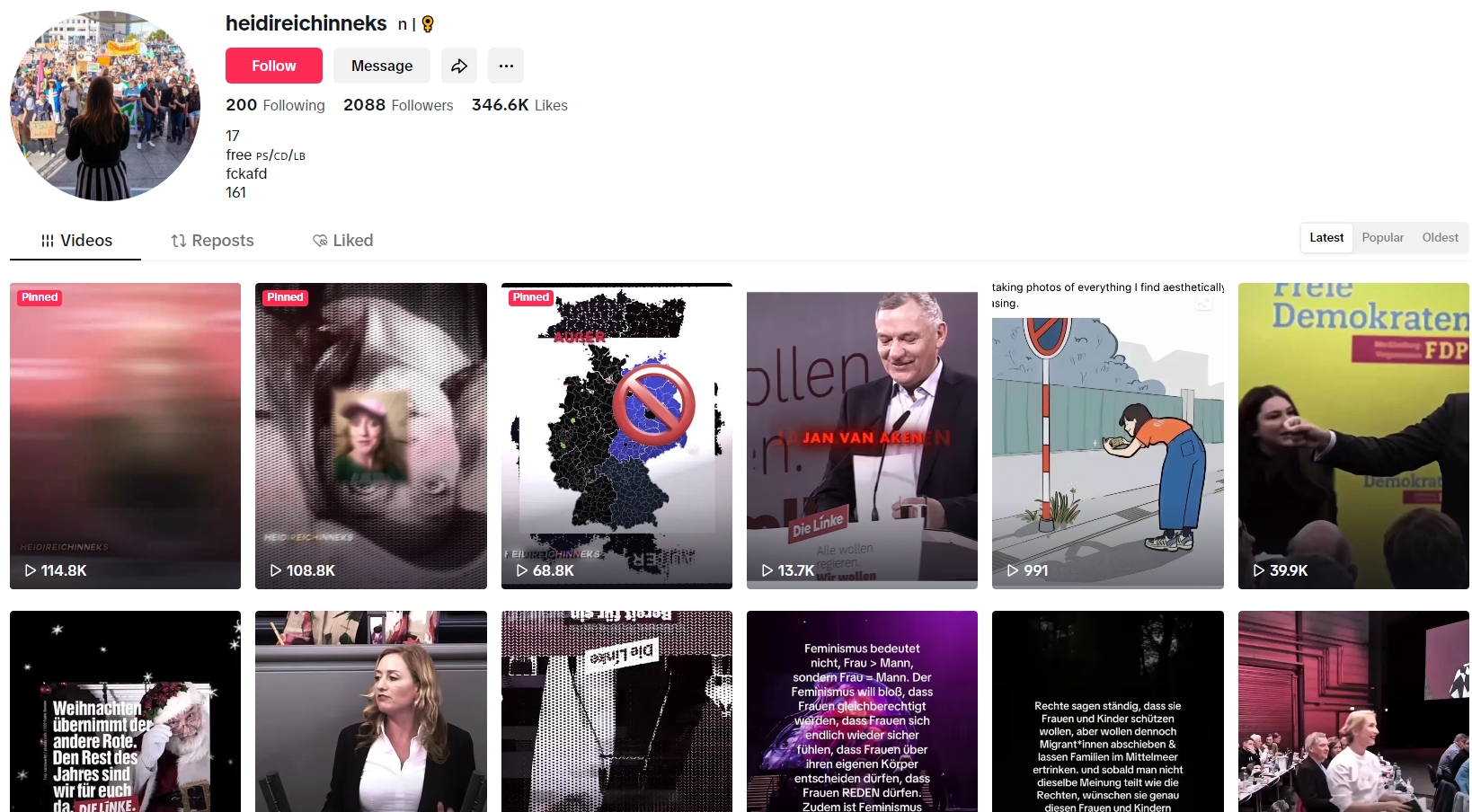

Image 1

Above: A screenshot of a murky account impersonating Alice Weidel (AfD), with more than 11,000 followers


All of the murky accounts identified promoting The Left (four) impersonated Heidi Reichinnek, the party’s top candidate, and as we show later in the data, these four accounts achieved significant engagement success. Similarly, the two murky accounts identified promoting the FDP were those impersonating lead candidate Christian Lindner. Robert Habeck (six murky accounts) and Friedrich Merz (five murky accounts) were the only impersonated candidates for Alliance 90/The Greens and the CDU, respectively.

Figure 2

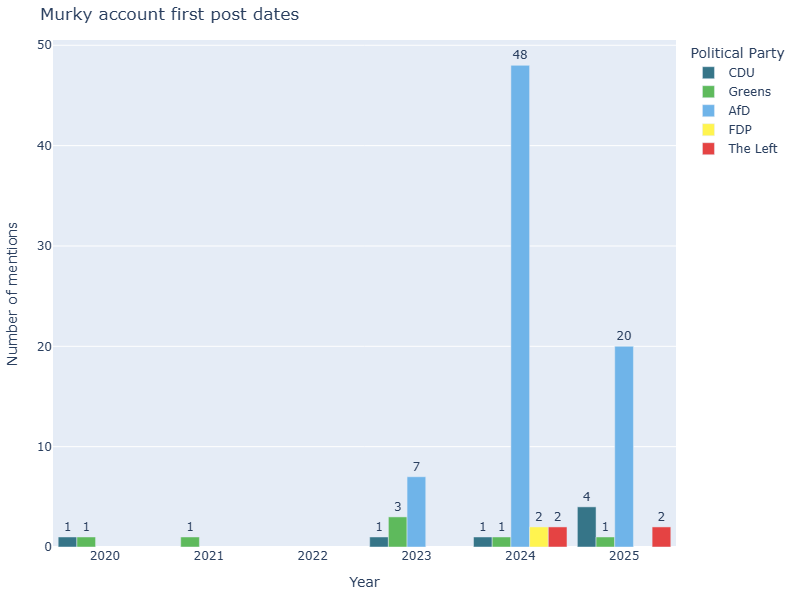

When did these accounts publish their first videos?

Due to restricted access to APIs, we were unable to determine the creation dates for the accounts. Instead, we relied on estimations based on the upload date of each murky account’s first video.7

While we identified instances of murky accounts that began their activity quite some time ago, including cases from 2020 and 2021, most accounts began actively uploading content only after 2023. Forty-eight of the murky accounts that began uploading content in 2024 were impersonating the AfD and its politicians. An additional 20 pro-AfD murky accounts appeared in January 2025 alone, indicating a possible scaling up ahead of the elections. The findings from murky accounts in favour of the CDU and The Left show that the number of murky accounts linked to these parties on TikTok also increased as the elections drew closer, suggesting a potentially broader adoption of this approach as election day nears.

Figure 3

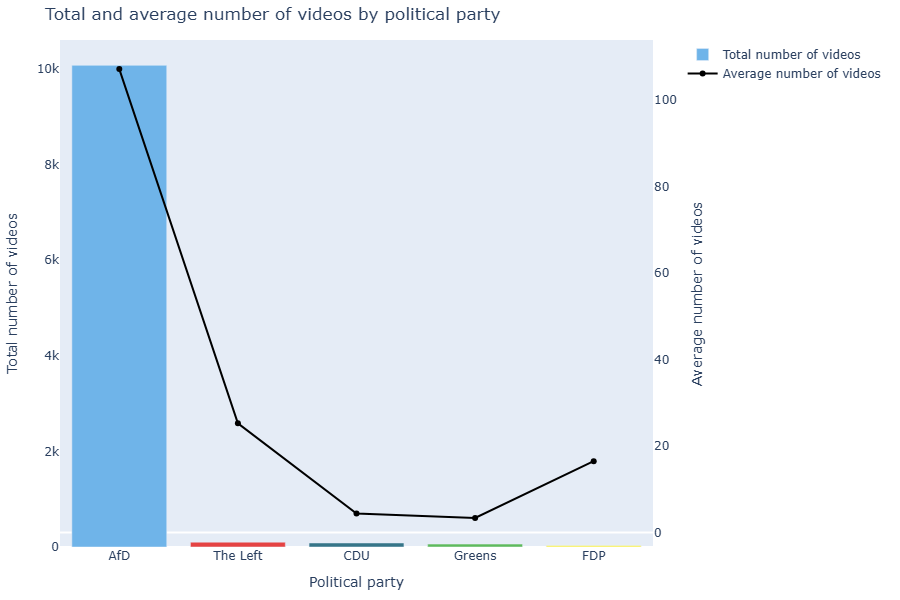

Average and total number of videos by party affiliation

AfD murky accounts published a total of 10,074 videos, significantly more than the accounts of the other parties. The Left’s murky accounts published a total of 101 videos, the CDU’s 84, Alliance 90/The Greens’ 61, and the FDP’s 33. Likewise, accounts promoting the AfD far surpassed those for other parties in the average number of videos uploaded per account (107.17).

Figure 4

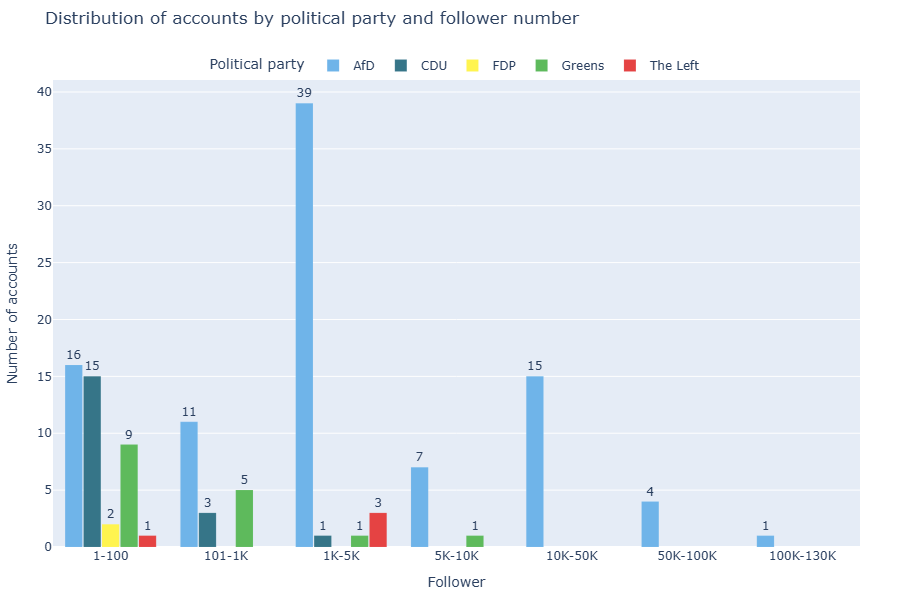

Understanding murky account follower numbers and likes

To assess the reach of the identified murky accounts, we collected data on their number of followers, which we present below as distributed across seven follower brackets: 1-100; 101-1,000; 1,001-5,000; 5,001-10,000; 10,001-50,000; 50,001-100,000; and 100,001-130,000.

While murky accounts linked to other parties were mostly concentrated within the first three follower brackets (1-5,000 followers), AfD murky accounts stood out, with 27 accounts exceeding 5,000 followers. Among them, 15 had from 10,000 to 50,000 followers, four had from 50,000 to 100,000, and one account had as many as 128,600 followers. The Left and Alliance 90/The Greens were the only other parties whose murky accounts crossed the 1,000-follower threshold.
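The bracket assignment described above can be expressed compactly. The following is a minimal sketch, not the procedure used for this report (which was manual); the function name `follower_bracket` and the error handling for out-of-range values are our own illustrative assumptions.

```python
from bisect import bisect_left

# Inclusive upper bounds of the seven follower brackets used in this report.
UPPER_BOUNDS = [100, 1_000, 5_000, 10_000, 50_000, 100_000, 130_000]
LABELS = [
    "1-100",
    "101-1,000",
    "1,001-5,000",
    "5,001-10,000",
    "10,001-50,000",
    "50,001-100,000",
    "100,001-130,000",
]


def follower_bracket(followers: int) -> str:
    """Return the label of the bracket that a follower count falls into.

    Hypothetical helper: counts outside 1-130,000 raise an error because
    the report defines no bracket for them.
    """
    if not 1 <= followers <= UPPER_BOUNDS[-1]:
        raise ValueError(f"no bracket defined for {followers} followers")
    # bisect_left finds the first upper bound that is >= followers.
    return LABELS[bisect_left(UPPER_BOUNDS, followers)]


# The largest account in the sample, with 128,600 followers.
print(follower_bracket(128_600))
```

Using inclusive upper bounds with `bisect_left` keeps boundary values such as 5,000 in the lower bracket, which matches the way the brackets are written in the text.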

Figure 5

The only two accounts found for the FDP, both impersonating lead candidate Christian Lindner, had under 100 followers. The murky accounts linked to the CDU generally had a low follower count, with most falling between one and 100. The accounts tied to Alliance 90/The Greens showed slightly higher success rates, with one account having between 5,000 and 10,000 followers.

We also considered the total number of likes received by each account, from which we determined the average and total number of likes per party affiliation. AfD murky accounts garnered the highest total number of received likes. The Left slightly surpassed the AfD in average likes, showing the higher level of engagement achieved by the four accounts we identified, which suggests that even low-effort use of murky accounts can have a meaningful impact on opinion-shaping.

| Political party | Average received likes | Total received likes |
| --- | --- | --- |
| AfD | 88,305.36 | 8,300,704 |
| The Left | 93,453.25 | 373,813 |
| Alliance 90/The Greens | 3,155.50 | 56,799 |
| CDU | 74.68 | 1,419 |
| FDP | 59 | 118 |
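The averages in the table are consistent with dividing each party's total likes by its number of accounts. A minimal Python sketch of that calculation follows. The denominators are the account counts from the results, except for the AfD, where 94 rather than 95 is assumed because that value reproduces the reported average; the report does not state the reason for the difference.

```python
# Total received likes per party, copied from the table above.
total_likes = {
    "AfD": 8_300_704,
    "The Left": 373_813,
    "Alliance 90/The Greens": 56_799,
    "CDU": 1_419,
    "FDP": 118,
}

# Number of accounts used as the denominator.
# ASSUMPTION: 94 for the AfD is inferred from the reported average,
# not stated in the report; the other counts come from the results.
n_accounts = {
    "AfD": 94,
    "The Left": 4,
    "Alliance 90/The Greens": 18,
    "CDU": 19,
    "FDP": 2,
}

# Average received likes per account, rounded to two decimals.
average_likes = {
    party: round(total_likes[party] / n_accounts[party], 2)
    for party in total_likes
}

for party, avg in average_likes.items():
    print(f"{party}: {avg:,.2f} average likes per account")
```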

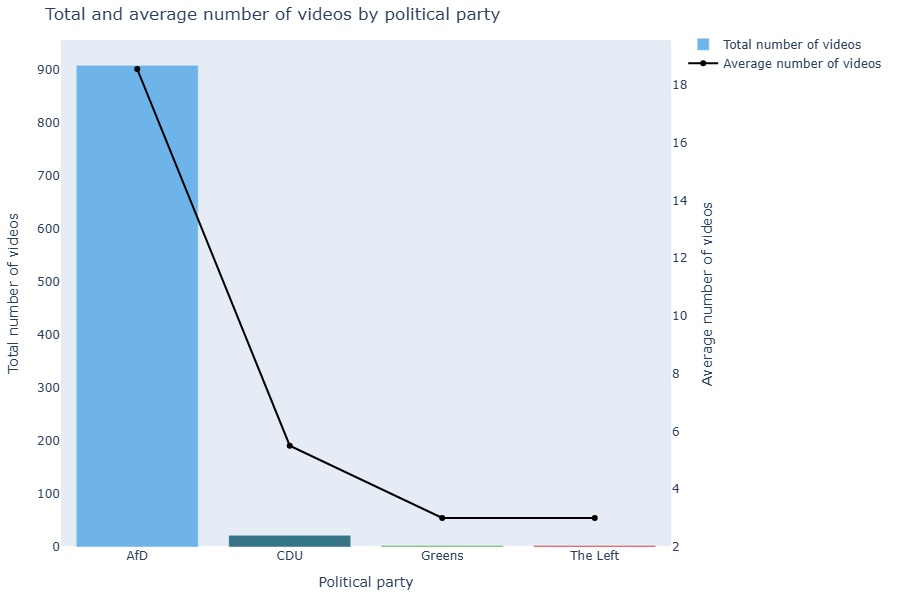

Activity and engagement analysis between 8 January and 10 February

We decided to set a specific period of interest, 8 January to 10 February, for which we could manually assess the extent of posting, reach, and engagement performance of videos. During this period, 55 of the 138 identified accounts were active and uploading content, publishing a total of 937 videos.

Among these videos, 909 were shared by accounts impersonating the AfD or its political candidates. The remaining 28 videos came from accounts impersonating other parties: 22 from CDU accounts, and three each from accounts for The Left and Alliance 90/The Greens. No videos were published in this period by the accounts impersonating the FDP or its officials.

Figure 6

Murky accounts impersonating the AfD had an average of 5,318.8 views per video. In comparison, accounts impersonating The Left, the CDU, and Alliance 90/The Greens averaged fewer than 400 views, and had as few as 18 in the case of Alliance 90/The Greens. The AfD accounts further garnered an average of 597.8 likes per video, far outperforming all other parties. The AfD accounts also outperformed other murky accounts in comments and shares, averaging 44.2 and 53.0 per video, respectively, while all other accounts averaged fewer than two for both metrics.

| Political party | Views per video | Likes per video | Comments per video | Shares per video |
| --- | --- | --- | --- | --- |
| AfD | 5,318.84 | 597.84 | 44.19 | 52.96 |
| CDU | 383.77 | 10.77 | 1.73 | 0.27 |
| The Left | 188.33 | 28.67 | 1.33 | 0 |
| Greens | 18 | 4 | 0.67 | 0 |

What do these findings show?

Overall, our analysis of 138 murky accounts paints a clear picture of the continued prevalence and magnitude of the issue of murky accounts on TikTok. With reporting based on data collected up to 10 February, nearly two weeks before the elections, we believe that murky accounts have shaped and will continue to shape political discourse on TikTok (and elsewhere), possibly at an increasingly higher level as we approach election day.

The analysis also paints a clear picture of the key beneficiary of murky account activity on TikTok – the AfD. Not only are the AfD murky accounts the most numerous in our dataset (68.8 per cent), but they also consistently, and by a wide margin, outperform all other murky accounts identified on all metrics considered, including activity, reach, engagement, and followers. Furthermore, our data shows that more of these accounts appeared or became active as the election date approached, and that most content generated by murky accounts in the period between 8 January and 10 February was generated by the pro-AfD accounts, with great engagement success. Given that we spent equal time searching for murky accounts associated with each party, we believe that our sample accurately reflects the broader picture of murky account distribution. At the same time, we should not neglect the fact that our findings also show that other parties or their supporters may have begun using murky accounts as a campaigning tactic. Based on the findings of this report, however, this is currently very limited, with only the four accounts identified in favour of The Left achieving notable engagement figures.

The proliferation of multiple accounts for the same political parties, figures and candidates poses a systemic risk to civic discourse and electoral processes in the European Union. This proliferation undermines the integrity of TikTok’s service in political contexts, as it misleads voters, distorts perceptions of online support for specific parties or candidates, and provides an easy avenue to circumvent TikTok’s stricter policies regarding government, politician, and party accounts.

Methodology

We initially envisioned this project as one that would be conducted via TikTok’s API. Per our assessment, however, the API and corresponding virtual compute environment (VCE) proved to be poorly designed and difficult to work with, presenting numerous technical and operational obstacles. These limitations forced us to resort to manual data collection methods, significantly limiting this report’s scale and scope. Without API access, gathering key indicators, such as “average likes”, required manually recording the number of likes on each video from suspected murky accounts – a time-consuming process given the volume of content. Similarly, investigating connections between murky accounts, such as shared followers or mutual engagements, became impractical without the ability to automatically extract and analyse follower and following lists.

Data collection

Data collection for this analysis was conducted in several sessions between 29 January and 10 February 2025. During the data collection phase, we also received valuable external contributions and reports from an independent journalist.8 The data collection process for this study was carried out through TikTok’s “Explore” section, where we searched for the names of political candidates or parties. In doing so, we tried to identify all accounts that could be classified as “murky”, based on our authenticity assessment criteria, described below. These accounts were carefully reviewed and flagged accordingly.

As this project aimed at investigating all identifiable murky accounts, regardless of political affiliation, the data collection process was applied consistently and for equal periods of time across all political parties and influential figures (lead candidates, party leaders, and prominent politicians), ensuring a comprehensive and unbiased view of the murky account environment.

Data collection efforts were time-consuming, taking three researchers over five working days of querying for accounts and recording indicators.

Detecting murky accounts with authenticity indicators

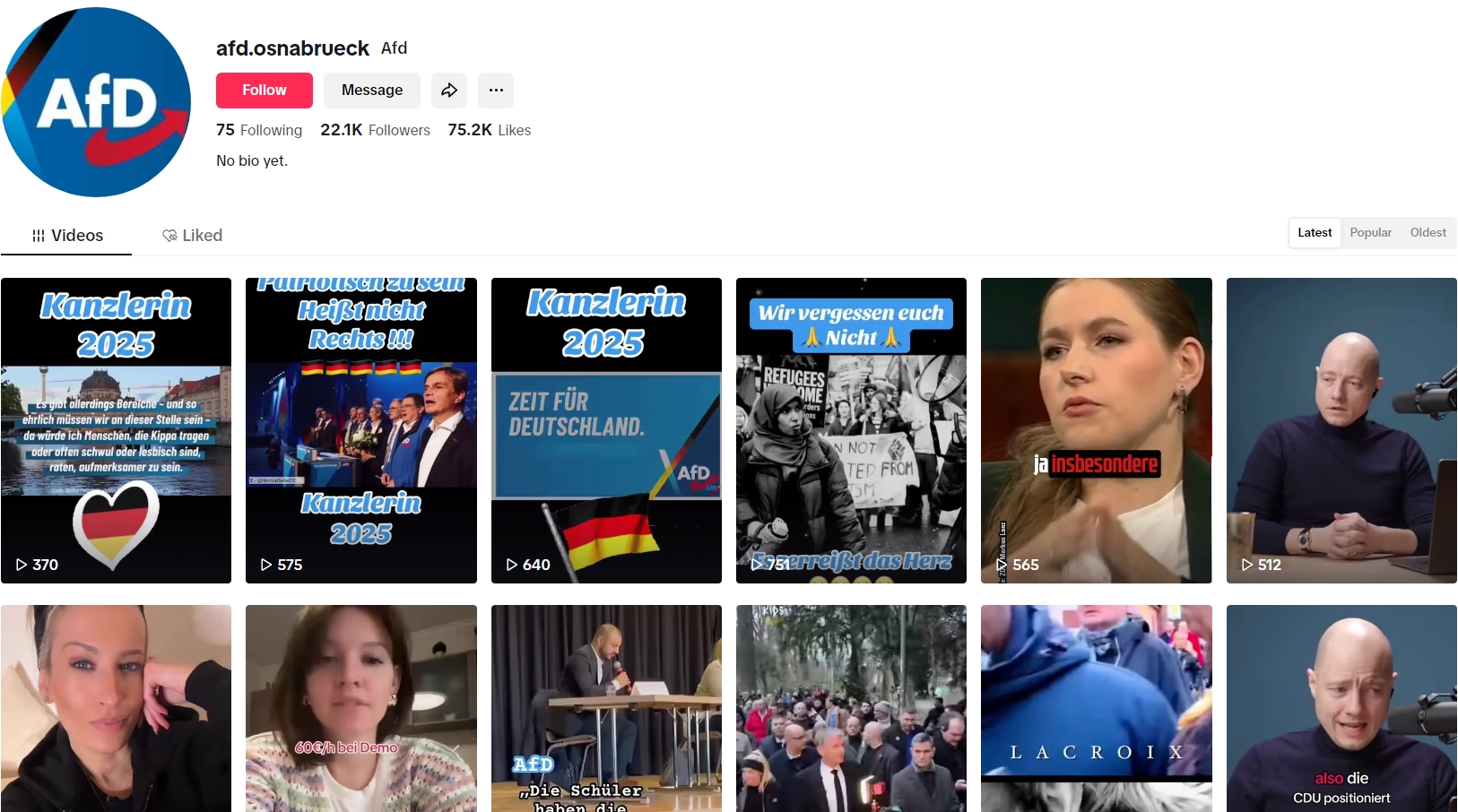

To assess whether an account should be classified as murky, we developed a comprehensive process for authenticity assessment. We began by analysing account usernames, specifically looking for slight variations in usernames that closely mimic legitimate political accounts, such as the addition of extra letters, numbers, or symbols. For example, several of the accounts we found impersonating AfD main candidate Alice Weidel had handles that consisted of her name along with a digit (e.g., alice_weidel_afd_1, alice_weidel_4, alice_weidel1759). Others’ usernames simply suggested clear official affiliation (e.g., afd.osnabrueck), without further verification in the profile information. We also examined the profile picture, looking for signs of visual misrepresentation or theft, while also taking note of the overall credibility of the profile. We further assessed behavioural patterns, looking for irregular activity, such as sudden bursts of posts following long periods of inactivity or an unnatural posting frequency or strategy.
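The username check described above was carried out manually. Purely as an illustration of the idea, the following Python sketch flags handles that contain a politician's name once digits, separators, and symbols are stripped. The function names `normalise` and `is_candidate` are hypothetical, and such a filter would only surface candidates for manual review: it also matches official accounts, and it does not cover the other indicators (profile picture, verification status, behaviour).

```python
import re


def normalise(text: str) -> str:
    """Lower-case text and drop digits, underscores, spaces, and symbols."""
    return re.sub(r"[\W\d_]+", "", text.lower())


def is_candidate(handle: str, name: str) -> bool:
    """True if the handle contains the name once decoration is removed.

    Hypothetical helper for illustration; a match is a prompt for
    manual review, not a classification.
    """
    return normalise(name) in normalise(handle)


# Handles quoted in this report.
handles = [
    "alice_weidel_afd_1",
    "alice_weidel_4",
    "alice_weidel1759",
    "afd.osnabrueck",
]

# Keep only the handles that resemble the given politician's name.
flagged = [h for h in handles if is_candidate(h, "Alice Weidel")]
print(flagged)
```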

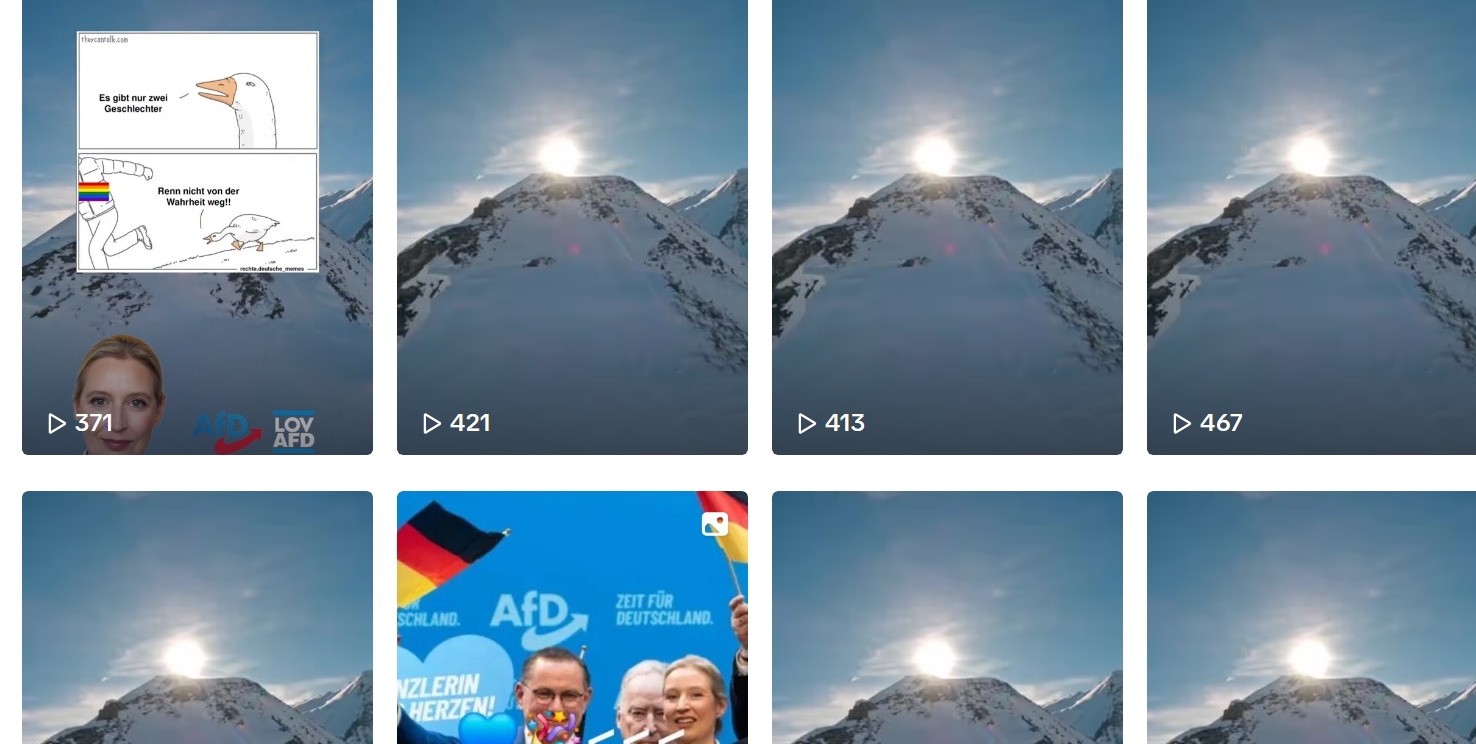

Image 2

Above: A murky account claiming to be the AfD Osnabrueck page. Despite featuring an AfD logo as its profile picture, it is not verified, and cannot be confirmed as an official AfD affiliate.

Verification status was another key factor – if the account was verified, this led us to not classify it as murky. Additionally, we checked for the absence of a “fan page” or “supporter” label, which would have immediately excluded the account from our analysis, since this disclosure is considered legitimate by TikTok guidelines. We believe it’s important to note, however, that exclusion based on a simple label may be insufficient, as fan pages nonetheless represent potential disruption of public discourse, via the amplification of content in a misleading manner.

Image 3

Above: A screenshot of one murky account impersonating Heidi Reichinnek, the top candidate of The Left. All murky accounts linked to the party impersonate this candidate.

While not all observed murky accounts were actively publishing content, where possible, we also examined the content shared to help us determine the authenticity of the account. In previous research, we found that murky accounts often share monothematic posts, frequently repost content, or disseminate fabricated or AI-generated media. Poorly written descriptions or translations, excessive use of hashtags, and repeated use of manipulated visuals were also treated as indicators of inauthenticity.

Image 4

Above: A screenshot of a murky account impersonating the AfD. At first glance, the content appears to be simple landscape images shared repeatedly but, on closer inspection, we can see pro-AfD messaging overlaid on much of it. We interpret this approach of repeatedly sharing slight variations of content along with political messaging as a strong indicator of inauthenticity.

Taking this wide range of indicators into account set a high threshold for an account to be considered “murky”. As a result, we believe our data set contains very few, if any, falsely classified murky accounts (false positives).

Metrics collected

Once murky accounts were identified, we collected a range of metrics from each account. This included noting the political party or figure the account was impersonating, as well as the username and display name associated with the account. We gathered quantitative data, such as the number of followers, the number of accounts the account was following, and the number of likes each account received. The most popular video for each account was examined in terms of its view count, which provided insight into the reach of the account’s content.

For content published by these accounts between 8 January and 10 February, we also collected the number of likes, comments, and shares per video, allowing us to derive engagement metrics for content posted in this pre-election period.
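As a minimal sketch of how per-video engagement averages can be derived from such records, assuming each video is stored as a dictionary with a party label and its counts (the record layout and the function name `per_video_averages` are our assumptions, and the sample values are made up for illustration, not taken from this report):

```python
from collections import defaultdict

METRICS = ("views", "likes", "comments", "shares")


def per_video_averages(videos):
    """Average each metric per video, grouped by party affiliation."""
    totals = defaultdict(lambda: dict.fromkeys(METRICS, 0))
    n_videos = defaultdict(int)
    for video in videos:
        party = video["party"]
        n_videos[party] += 1
        for metric in METRICS:
            totals[party][metric] += video[metric]
    return {
        party: {
            metric: round(totals[party][metric] / n_videos[party], 2)
            for metric in METRICS
        }
        for party in totals
    }


# Made-up records for illustration only; not data from this report.
sample = [
    {"party": "A", "views": 100, "likes": 10, "comments": 2, "shares": 1},
    {"party": "A", "views": 300, "likes": 30, "comments": 0, "shares": 3},
    {"party": "B", "views": 50, "likes": 5, "comments": 1, "shares": 0},
]

averages = per_video_averages(sample)
print(averages)
```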

References

1. Daniela Alvarado Rincón & Michael Meyer-Resende, “The big loophole (and how to close it): How TikTok’s policy and practice invites murky political accounts”, DRI, 22 July 2024.

2. John Shelton, “Germany: Nearly 90% of voters fear manipulation”, DW, 6 February 2025.

3. DRI, “Local Insights, European Trends: Case Studies on Digital Discourse in the 2024 EP Elections”, 13 August 2024.

4. Brie Pegum, “How TikTok counters deceptive behaviour”, TikTok, 9 December 2024.

5. DRI, “Disinformation Concern: Inauthentic TikTok Accounts that Support Political Parties”, 24 May 2024.

6. Michael Meyer-Resende & Daniela Alvarado Rincón, “Manufactured Support: How Inauthentic Activity on TikTok Bolstered the Far-Right in Romania”, DRI, 28 November 2024.

7. We excluded 43 accounts from this part of the analysis, as 41 had no published content, whereas two were removed by TikTok before we could complete data collection. Therefore, this analysis is based on the remaining 95 accounts. Although the excluded accounts did not publish any content, we cannot say whether or not they played a role in boosting engagement or coordinating with other accounts.

8. Several accounts were identified and shared with DRI by independent journalist Menna Ayman.