Executive Summary

This report presents new evidence that inauthentic political activity on TikTok continues to be a concern during election campaigns in Europe. DRI’s latest monitoring focused on Romania, where voters took part in a rerun of the presidential election after the Constitutional Court annulled, in December 2024, the results of the first run of the election, held in November. The Court’s decision was based on its finding of foreign interference on social media, particularly on TikTok. In the five weeks of monitoring ahead of the May 2025 election, we identified 323 murky accounts impersonating candidates or political parties. These findings show that significant numbers of murky accounts remain active on TikTok in election contexts, underscoring the urgent need for the platform to take more adaptive and proactive measures to prevent this inauthentic behaviour.

Key findings

- We were able to collect data from 248 of the 323 murky accounts identified as impersonating candidates or political parties; the remaining accounts were shut down or removed by TikTok before we could complete data collection. We reported 205 of these accounts to TikTok – specifically, all of the active accounts at the time of flagging. The platform subsequently removed 184 of these.

- Of the 248 murky accounts for which we could complete full data collection and analysis, 89 (35.2 per cent) were supporting Călin Georgescu, the winner of the 2024 vote, who was denied candidacy for the 2025 campaign. Elena Lasconi, the candidate of the liberal party Save Romania Union (USR), was impersonated by 36 accounts (14.2 per cent), followed by the right-wing Alliance for the Union of Romanians (AUR) candidate, George Simion, with 28 accounts (11.0 per cent), and the independent candidate Nicușor Dan, who ultimately won the 2025 election in the second round, with 21 accounts (8.3 per cent).

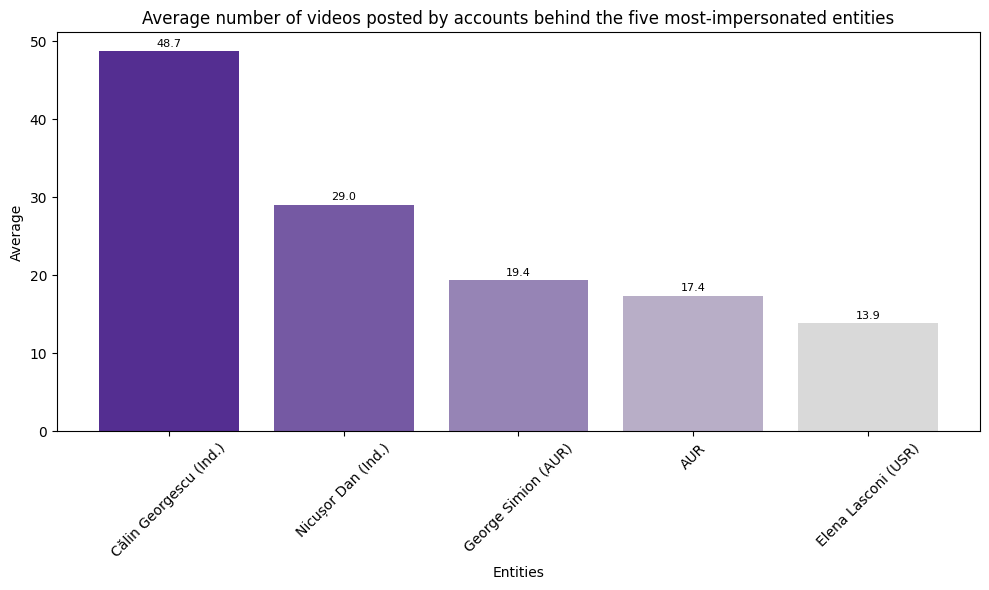

- Accounts impersonating Georgescu were not just the most prevalent, but also stood out as the most active, publishing an average of 48.7 videos each – more than double the output of most other impersonated figures, suggesting strong continued efforts to maintain his political visibility. Additionally, this murky account campaign surrounding Georgescu can be interpreted as a way to advance Simion’s candidacy, given the latter’s role as his political successor and his willingness to name Georgescu as prime minister in case of victory.

- In terms of impact, Simion may have benefitted most from the use of murky accounts. Despite having fewer accounts and publishing fewer videos than Georgescu, the accounts linked to Simion achieved the highest average engagement, at nearly twice that of Georgescu and four times that of the eventual winner, Dan. In absolute numbers, Georgescu still led, with 1,176,491 total hearts, followed by Simion, with 745,892. It is important to note that some, or even much, of the engagement generated by the accounts investigated in this study may not be authentic, though we did not explore the extent of this in our analysis.

Introduction

On 4 May 2025, Romanians returned to the polls to elect a new president, following the annulment in December 2024 of the election results from the first running of the election the previous month. The runoff, held on 18 May, ended with the pro-EU, independent candidate Nicușor Dan securing victory, with 53.8 per cent of the votes, defeating the AUR candidate, George Simion, who had led after the first round of voting. These elections followed the annulment by the Constitutional Court of an electoral process tainted by various negative elements, including foreign interference and inauthentic online activity, in which TikTok played a significant role.

DRI has been monitoring this electoral process since November 2024, and flagged inauthentic accounts on TikTok before the Romanian Intelligence Service confirmed the foreign interference. During the 2024 cycle, DRI identified 114 inauthentic TikTok accounts impersonating candidate and party accounts. After we flagged these to TikTok, the platform removed 105 on the grounds that they violated its impersonation policy.

Building on our previous efforts, we refined our methodology to enable more systematic and timely monitoring of inauthentic TikTok activity during the 2025 campaign. This updated approach introduced weekly data collection over five weeks, and was structured in two phases: first, the identification of accounts impersonating official political accounts; and second, targeted data collection from these accounts. This enhanced methodology gave us a more detailed picture of the evolving landscape of murky accounts.

As shown in Table 1, over the five weeks of monitoring, we identified 323 murky accounts and were able to gather data from 248 accounts before the others became unavailable for full data collection, as a result either of the volatility of such murky accounts or of TikTok’s limited efforts to remove accounts proactively.

As we approached the first round, we flagged 205 murky accounts to TikTok: all of the accounts, among the 248 for which full data collection had been completed, that were still active. Of these, TikTok removed 184, largely citing violations of its impersonation policy. While it is positive that TikTok rapidly removed most of these accounts, which demonstrates the platform’s capacity to act when presented with evidence, it also raises questions about gaps in its proactive enforcement.

Table 1. Overview of data collection dates and number of identified murky accounts

| Query collection week and date | Identified murky accounts | Murky account data collected |
| --- | --- | --- |
|  | 180 | 129 |
|  | 44 | 44 |
|  | 25 | 22 |
|  | 33 | 23 |
|  | 41 | 30 |
| Total | 323 | 248 |
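The totals in Table 1 can be checked with a few lines of arithmetic. The following is a minimal sketch in Python, not part of DRI's tooling: it sums the weekly counts and computes the share of identified accounts for which data could be collected. The variable names are illustrative assumptions, and the counts are taken in the table's row order.

```python
# Weekly counts transcribed from Table 1, in the table's row order.
identified = [180, 44, 25, 33, 41]
collected = [129, 44, 22, 23, 30]

total_identified = sum(identified)
total_collected = sum(collected)

# Share of identified accounts that were still available for data collection.
overall_share = 100 * total_collected / total_identified
weekly_shares = [round(100 * c / i, 1) for c, i in zip(collected, identified)]

print(total_identified, total_collected)
print(round(overall_share, 1))
print(weekly_shares)
```

The weekly sums match the reported totals of 323 and 248, and a little over three quarters of the identified accounts remained available long enough for full data collection.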

Main Findings

Murky Account Distribution and Characteristics

First, we investigated how the identified murky accounts were distributed across candidates and parties. Most of the accounts impersonated political candidates (11 in total), while the remainder impersonated six political parties. Although he was disqualified as a candidate for the 2025 election, we included in this analysis accounts impersonating Călin Georgescu, whose victory in the 2024 election was annulled by the Constitutional Court, because of the outsized amplification his candidacy received in that campaign and the influence his continued online prevalence may have had on Simion’s campaign.

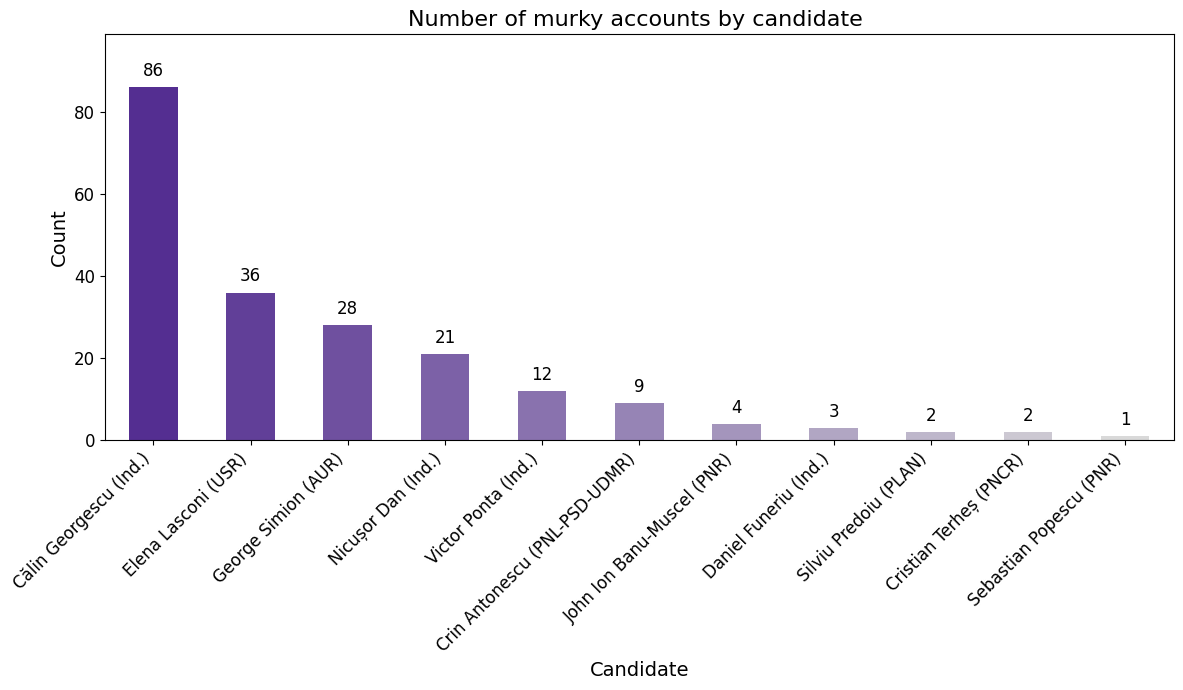

Georgescu emerged as the most frequently impersonated figure, with 86 of the 248 accounts (34.7 per cent). He was followed by the USR candidate, Elena Lasconi, who was impersonated by 36 accounts (14.5 per cent). The finalists for the second round, George Simion and Nicușor Dan, were impersonated by 28 (11.3 per cent) and 21 (8.5 per cent) of the accounts, respectively. Of the 11 candidates for the first round, we identified murky accounts impersonating all except Lavinia Șandru from the Social Liberal Humanist Party (PSUL).

Figure 1.

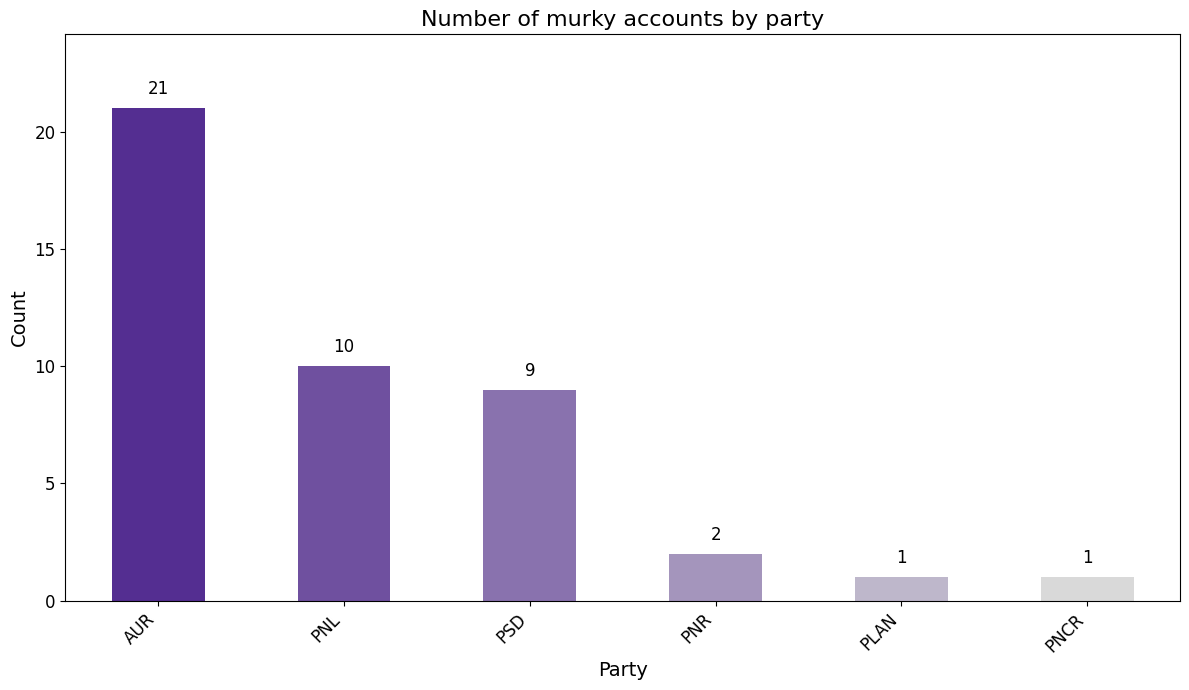

Among the political parties, Alliance for the Union of Romanians (AUR) was the most frequently impersonated, with 21 murky accounts, many of which included content promoting its candidate, Simion. The National Liberal Party (PNL) and the Social Democratic Party (PSD), which both endorsed the same candidate, Antonescu, followed, with 10 and 9 murky accounts, respectively. Other parties, including the New Romania Party (PNR), the National Action League Party (PLAN), and the Romanian National Conservative Party (PNCR), were impersonated to a lesser extent, appearing in only one or two accounts each.

The identification of murky accounts promoting nearly all Romanian parties and candidates highlights that the creation of such accounts is a widespread tactic employed across the entire political spectrum. This pervasive use suggests a strategic effort to influence public perceptions and election outcomes through impersonation, regardless of political affiliation.

Figure 2.

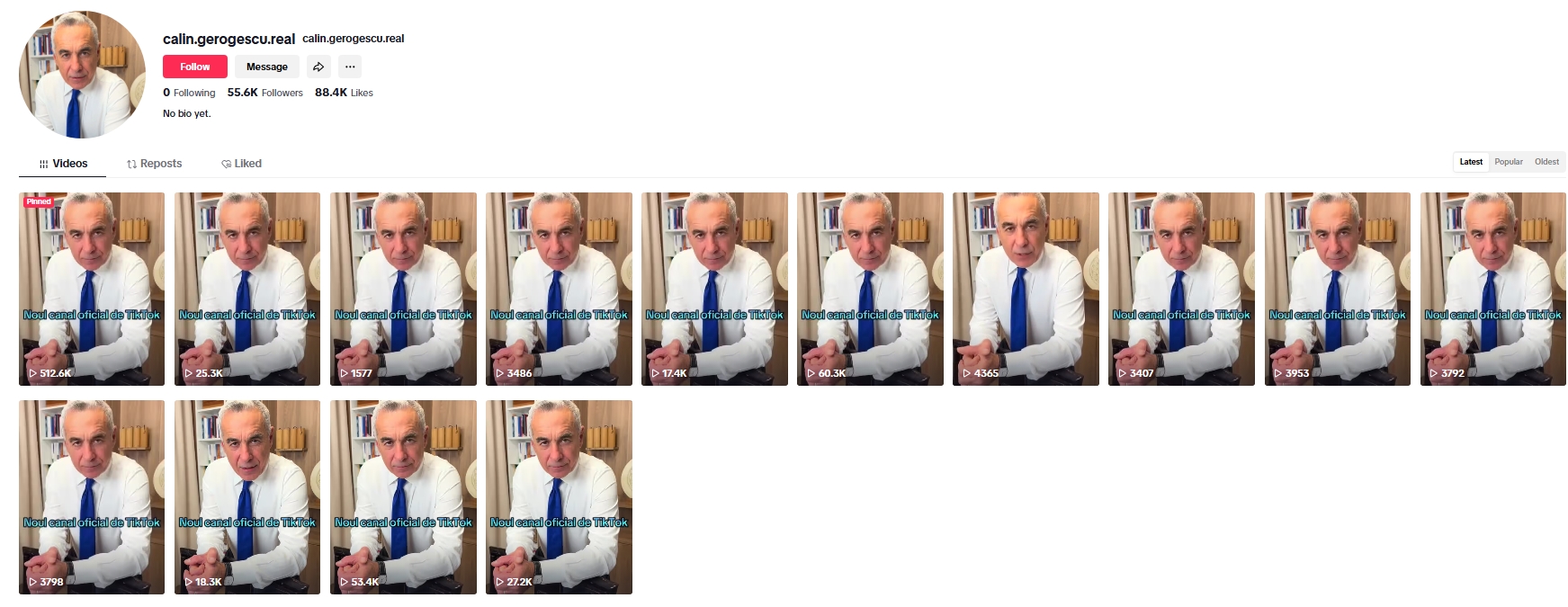

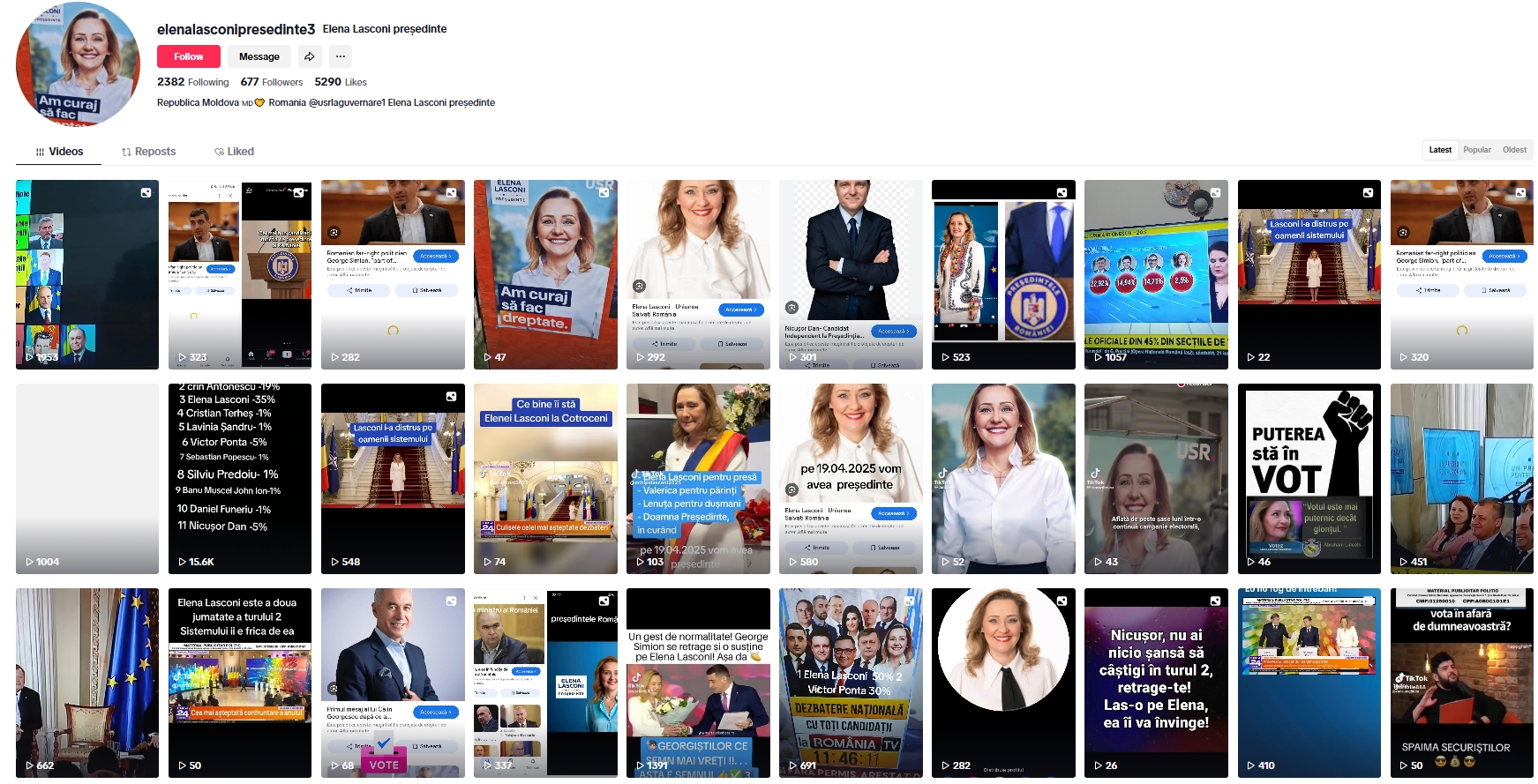

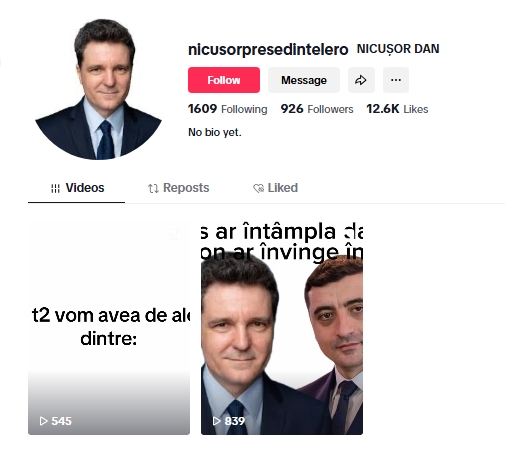

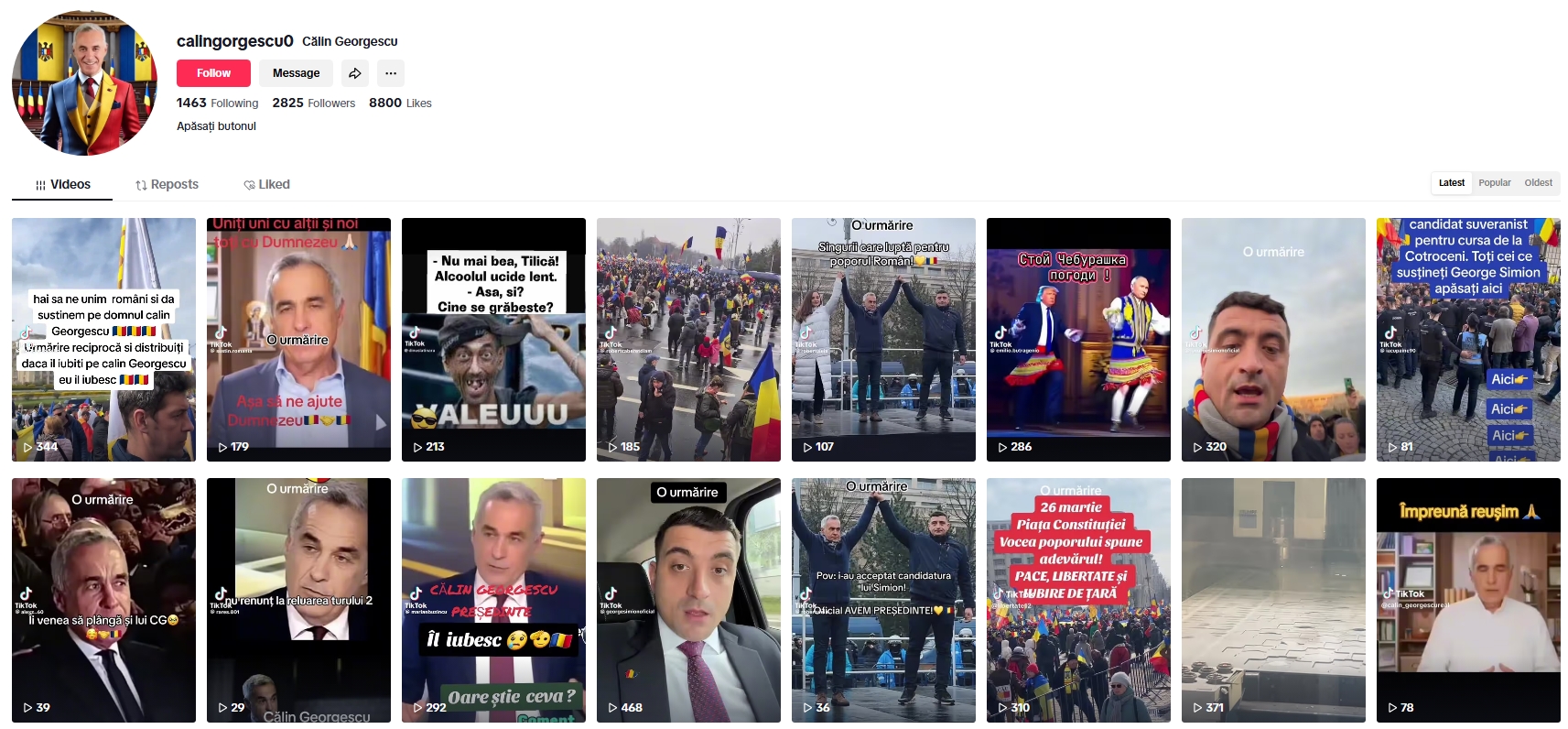

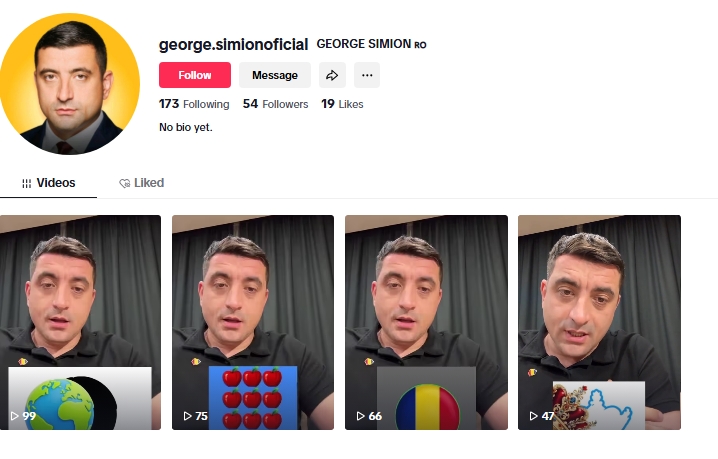

Below are selected examples of the murky accounts identified in our analysis. Note the key indicators of potentially inauthentic behaviour, including unusual follower-to-following ratios, repetitive content posting, and usernames deliberately designed to resemble official accounts. In terms of content strategy, as noted in previous investigations, murky accounts primarily focused on sharing news about the candidate or political party they were impersonating. They often included clips of the candidate or party representatives speaking at public events or debates (Image 4), as well as self-edited videos using TikTok trends and native features (Image 2) and repeated content (Images 1 and 5).

Image 1

An example of a murky account identified on 7 April, impersonating the banned candidate Călin Georgescu. Note the suspicious following-to-follower ratio, high engagement, and repetitive output.

Image 2

A screenshot of a murky account impersonating candidate Elena Lasconi – an example of a murky account reliant on TikTok trends and native video editing features.

Image 3

A screenshot of a murky account impersonating the ultimate winning candidate, Nicușor Dan.

Image 4

A screenshot of a murky account impersonating Călin Georgescu.

Image 5

A screenshot of a murky account impersonating candidate George Simion.

Analysis of the Top Five Most Impersonated Political Entities

Through consistent weekly monitoring, we were able to gain a clearer understanding of the volume, dynamics, and engagement generated by murky accounts in the lead-up to the elections. In this section, we explore the five most impersonated entities in more detail.

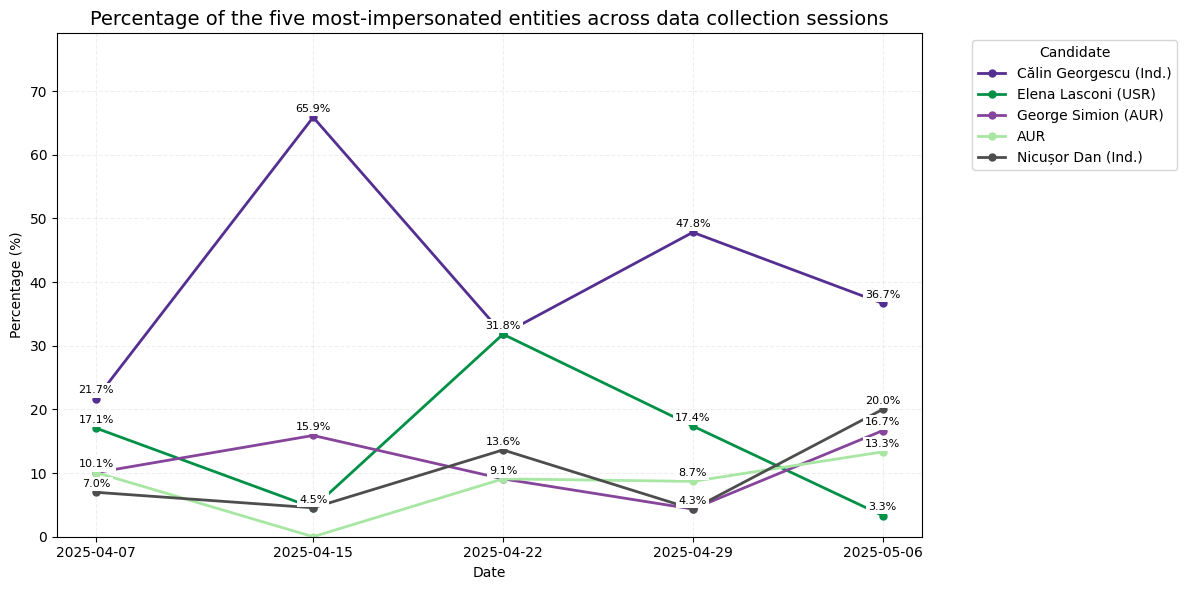

Murky account distribution across data collection sessions

Figure 3 shows the percentage of murky accounts associated with each political entity over the monitoring period, with the timeline on the x-axis reflecting our data collection dates.

Georgescu was the most impersonated candidate in almost every data collection session, representing between 21.7 per cent and 65.9 per cent of the sample. The exception was 22 April, when he and Lasconi accounted for the same share of accounts. The highest spike occurred on 15 April, when impersonations of Georgescu reached 65.9 per cent. His strong online presence could be interpreted as an unofficial extension of his 2024 campaign or as the long tail of the disproportionate amplification he received on TikTok. In either case, our data suggest a strong, deliberate effort to keep him in the political conversation, despite the ban.

The impersonation of Lasconi surged in the week of 22 April (at 31.8 per cent of accounts identified in that session), but fell again afterwards. This may indicate targeted or reactive impersonation, possibly tied to specific campaign events or overall goals for increased visibility during that week. Accounts impersonating Simion, the AUR and Dan followed a low but persistent trend, ranging from 4.3 per cent to 20 per cent of our sample across sessions, suggesting some, if limited, activity in attempts to increase their online visibility and engagement via impersonation.

Figure 3.

Content production scale and engagement

We further analysed the scale and potential influence of murky accounts using four key metrics – the number of videos posted per account, the total received hearts, and the follower and following counts.

When examining posting activity, accounts impersonating Georgescu stood out as the most active, publishing an average of 48.7 videos each, more than double the output of most other impersonated figures. Accounts impersonating the winning candidate, Dan, followed, with an average of 29.0 videos each. In contrast, accounts impersonating Simion, the AUR political party, and Lasconi were less prolific, with averages of between 13.9 and 19.4 videos each.

Figure 4.

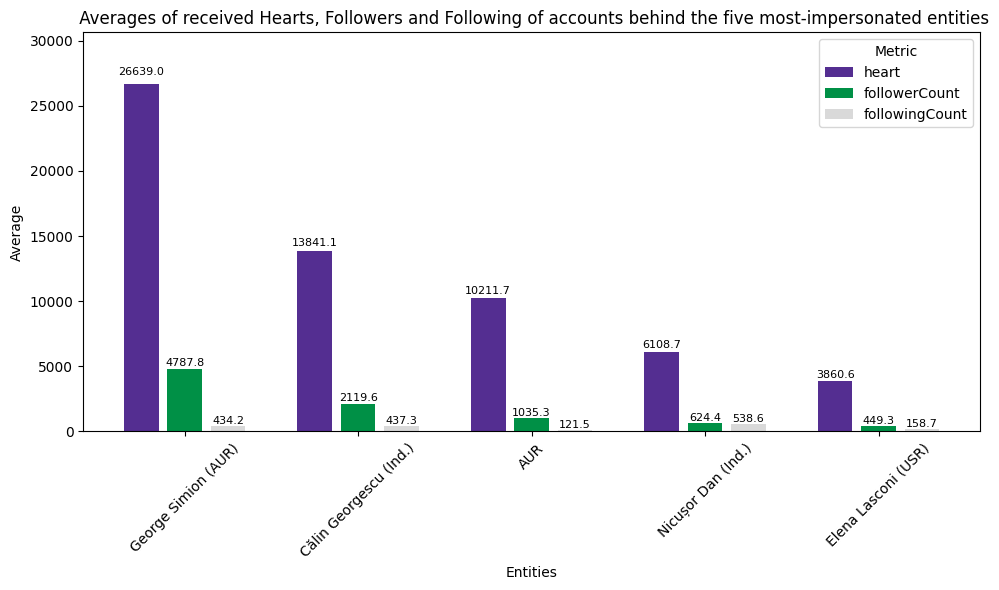

When looking at the engagement metrics, as shown in Figure 5, murky accounts impersonating AUR candidate Simion emerged as the most impactful. On average, accounts impersonating him accumulated 26,639 hearts and 4,787.8 followers, indicating a significant capacity to reach and influence audiences. This also aligns with the strong performance he achieved in the first round of the elections.

Figure 5.

Accounts impersonating Georgescu averaged 13,841.1 hearts and 2,119.6 followers, demonstrating that he remained influential in the online sphere, while those impersonating the AUR political party also showed notable reach, with an average of 10,211.7 hearts and 1,035.3 followers. Dan ranked fourth, with an average of 6,108.7 hearts and 624.4 followers. While lower than the figures for Simion and Georgescu, this still points to a moderate level of engagement around his candidacy. Finally, Lasconi, despite a relatively large number of murky accounts, ranked fifth in terms of engagement. Accounts impersonating her averaged 3,860.6 hearts and 449.3 followers per account.

Notably, accounts linked to Simion garnered the most engagement on average – almost double that of Georgescu, and four times that of Dan. This high level of engagement was achieved with significantly fewer accounts producing fewer videos as compared to those for Georgescu, highlighting the potential disproportionate impact of a small group of murky accounts.
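The comparison above follows directly from the average hearts per account reported in this section. The short Python sketch below recomputes the two ratios; the dictionary and the `engagement_ratio` helper are illustrative names introduced here, not part of DRI's analysis pipeline.

```python
# Average hearts per murky account, as reported in this section.
avg_hearts = {
    "Simion": 26_639.0,
    "Georgescu": 13_841.1,
    "AUR": 10_211.7,
    "Dan": 6_108.7,
    "Lasconi": 3_860.6,
}


def engagement_ratio(a: str, b: str) -> float:
    """Return how many times larger a's average hearts are than b's."""
    return avg_hearts[a] / avg_hearts[b]


simion_vs_georgescu = engagement_ratio("Simion", "Georgescu")
simion_vs_dan = engagement_ratio("Simion", "Dan")
print(f"{simion_vs_georgescu:.2f}", f"{simion_vs_dan:.2f}")
```

Both ratios are consistent with the wording used in the text: a little under two for Simion relative to Georgescu, and somewhat above four for Simion relative to Dan.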

To complement the average engagement insights and better illustrate the overall impact generated by the murky accounts, we also report some metrics in absolute numbers. Murky accounts impersonating Georgescu received the highest total number of hearts, with 1,176,491, followed by those linked to Simion, with 745,892. Accounts supporting the AUR, Lasconi, and Dan received less attention, totalling 214,446, 138,980, and 128,282 hearts, respectively.

Finally, it is important to note that the authenticity of the engagement above cannot be verified. Given that these accounts were identified as inauthentic, and thus likely part of influence operations, it is plausible that some of the engagement activity for them may have also been artificially generated.

Policy Recommendations

Given our consistent reporting and evidence gathering regarding the extent and scale of the issue of murky accounts, we continue to recommend that TikTok take stronger and significantly more proactive steps to safeguard political discourse and electoral integrity on the platform during elections. Failures on TikTok’s part to proactively address violations of its own terms of service – especially those threatening the integrity of electoral processes – are highlighted in this study by the large number of accounts impersonating Georgescu, the banned candidate. This underscores a lax approach to enforcement, even in cases where mass violations of terms have already been confirmed. Specifically, we urge the platform to:

- Implement design features to prevent the impersonation of political figures;

- Mandate verified badges for all political accounts in the EU;

- Conduct pre-election reviews to detect and address impersonation accounts effectively; and

- Carry out post-election clean-up efforts to remove lingering murky accounts, to prevent ongoing misinformation and protect future electoral integrity.

Methodology

This research project is the outcome of a standardised methodology for the identification of TikTok accounts that breach the platform’s impersonation policy – referred to in this study as murky accounts.

DRI, which has been investigating and reporting on these threats since last year’s European Parliament elections, defines a “murky” account as follows: A TikTok account of questionable affiliation that presents itself as an official government, politician, or party account (GPPPA) when, in fact, it is not. Murky accounts do not declare themselves as fan or parody pages, and can be interpreted as attempts to promote, amplify, and/or advertise political content.

To enable the detection of murky accounts, we began by compiling a comprehensive list of presidential candidates in Romania, along with their associated political parties, including both full names and abbreviations. This resulted in a total of 31 query terms. Drawing on prior research into the behaviour of impersonator accounts, we generated between two and five username variations for each entity, using common tactics such as the insertion of numbers, underscores (_), colons (:), and full stops (.) to mimic official profiles (examples of variations used are: “Călin Georgescu.oficial.1.”, “Călin Georgescu123”, “AUR_oficial romania”, “Lavinia Șandru presidente”, and “Partidului Noua Românie real”).
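As an illustration of this step, the sketch below shows one way such username variations could be generated in Python. It is a simplified assumption, not DRI's actual tooling: the separator and suffix lists and the `username_variants` function are hypothetical, and the report does not publish the exact rules used to build its query variations.

```python
from itertools import product

# Hypothetical separators and suffixes. The report names numbers, underscores,
# colons, and full stops as common tactics but does not list its exact rules.
SEPARATORS = ["_", ".", ":"]
SUFFIXES = ["oficial", "real", "123"]


def username_variants(name: str, limit: int = 5) -> list[str]:
    """Return up to `limit` impersonation-style variants of `name`."""
    if limit < 1:
        return []
    base = name.strip()
    variants: list[str] = []
    for sep, suffix in product(SEPARATORS, SUFFIXES):
        candidate = f"{base}{sep}{suffix}"
        if candidate not in variants:
            variants.append(candidate)
        if len(variants) == limit:
            break
    return variants


# Example: build search queries for two entities from the query-term list.
queries = {term: username_variants(term) for term in ["AUR", "Nicușor Dan"]}
print(queries)
```

Capping the output at five variants per entity mirrors the "between two and five" range described above; each resulting string would then be entered as a search query and the results checked manually.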

These variations were then used in weekly queries using TikTok’s search bar. Once data was collected based on these username variations, we conducted a manual check of all the accounts exhibiting indicators of impersonation or inauthentic behaviour, and classified them as “murky” based on our predefined criteria and TikTok’s impersonation policy.

As a result, 323 accounts were identified as impersonating political entities and were flagged as murky. We were then able to collect data from 248 profiles (as the remaining profiles were removed before we could complete data collection). The data points collected from these accounts include username, display name, bio, number of received hearts, and the number of followers and following.

Acknowledgements

This report was written by DRI Research Associate Francesca Giannaccini with contributions from DRI Research Coordinator Ognjan Denkovski. This paper is part of the access://democracy project funded by the Mercator Foundation. Its contents do not necessarily represent the position of the Mercator Foundation.