Executive summary

Between May and July 2024, DRI identified 116 TikTok accounts with unclear affiliations and questionable authenticity actively distributing and promoting politician and party content ahead of the European Parliament (EP) elections on 6-9 June and the snap French elections on 30 June and 7 July. While TikTok removed some of these accounts, numerous others remain active due to an inconsistent interpretation of TikTok’s own policies.

The proliferation of impersonation accounts of political figures and ambiguous “fan accounts” on TikTok poses a systemic risk to civic discourse and electoral processes within the European Union. These accounts undermine TikTok’s service integrity by (i) misleading users, including voters; (ii) distorting perceptions of party and candidate support through their extensive reach (often millions of views); and (iii) providing a loophole to bypass TikTok’s more restrictive policy on government, politician, and party accounts.

We recommend that TikTok and other VLOPs:

- Update their policies to prevent the abuse of the fan account category.

- Implement design features to prevent impersonation and inauthentic fan accounts of political figures.

- Mandate verified badges for all political accounts in the EU.

- Conduct pre-election reviews to detect and address impersonation and inauthentic fan accounts effectively.

- Ensure consistent enforcement of guidelines and policies.

We also recommend that the European Commission issue a request for information (Art. 67 DSA) to TikTok regarding the effectiveness of its current Community Guidelines and policies in preventing impersonation and inauthentic fan accounts of political figures.

A “murky” political game on TikTok

With 125 million reported active users in the EU, TikTok has become a pivotal arena for EU politicians and parties eager to win over young voters. In February, POLITICO reported that MEPs seeking re-election were flocking to the platform in droves, with nearly one in three members of the European Parliament active on the app. Among all the political actors on TikTok, far-right parties and politicians appear to be the most active and successful. During the EP elections campaign, the far-right Identity and Democracy (ID) group stood out as the group with the highest proportion of its members (27 MEPs) present on the platform and the highest activity: nearly 3,000 posts uploaded by 8 March.

Against this backdrop, DRI explored the political dynamics on TikTok in the run-up to the European elections. Our findings revealed that alongside the official engagement by parties and candidates on the platform there appears to be another phenomenon at play: a large number of accounts with unclear affiliations and questionable authenticity that are actively sharing and amplifying politician/party content.

Between May and July, we published four reports (see here, here, here and here) flagging 116 TikTok accounts from 31 candidates/political parties across 15 EU member states, representing nearly 3 million followers. We looked at accounts supporting parties from across the political spectrum. 79.31% of the flagged accounts supported far-right candidates and political parties. These accounts also dominated in terms of followership size, representing 91% of the total followers. Most other parties and candidates we reviewed only have one official account, posing no issue of authenticity or impersonation. In our reports, we use the term “murky political accounts” to describe accounts exhibiting some of the following public-facing characteristics:

- Using the name of a political party or candidate in their account name, sometimes with minor variations such as an extra letter, number, or symbol.

- Using the logo of a political party or the photo of a candidate, sometimes with slight variations.

- A lack of clarity about the account’s affiliation with the political party or candidate. It is not clear whether these accounts are official, paid by parties or fan-created. Even when affiliations are disclosed, the statements are frequently vague and confusing, appearing only in the description rather than in the account name or username.

- These accounts usually follow no one or only a few people but have a significant followership.

- They almost exclusively reshare or amplify party or candidate content.

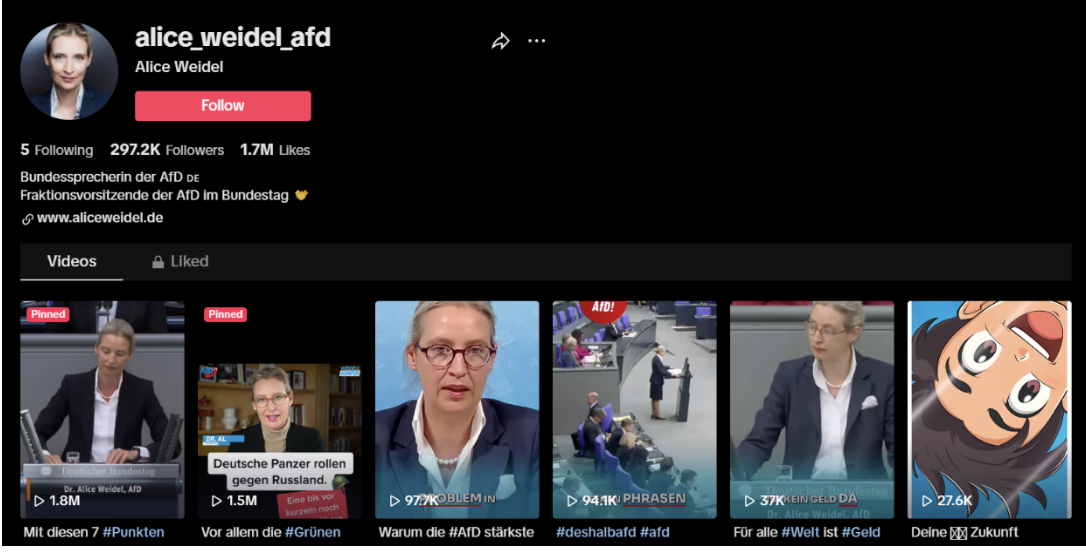

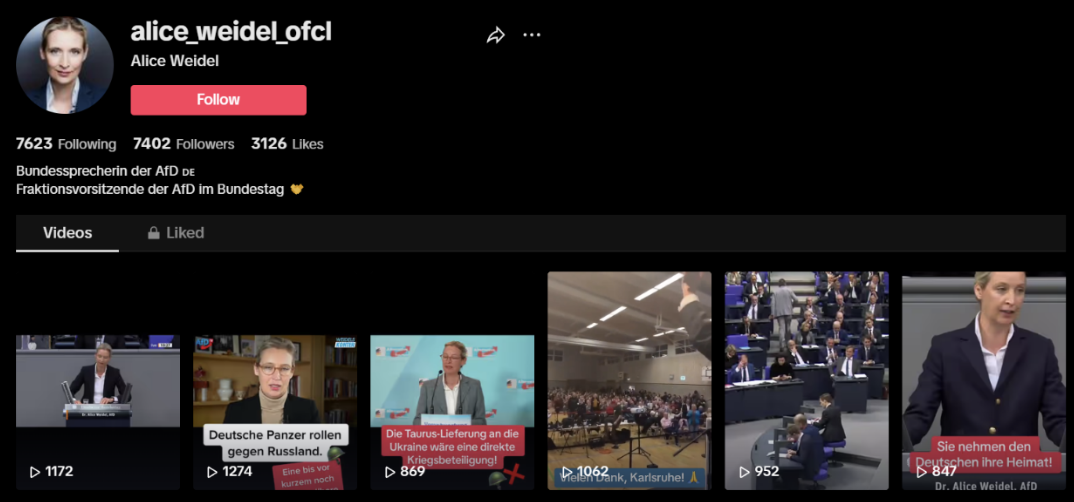

In some cases, the “murky” accounts category could overlap with impersonation, the practice of posing as another person or entity on an online platform by using someone else’s name and profile picture. However, it is worth noting that these “impersonations” seem to benefit the political candidate, so they are more likely a way to artificially boost the candidate’s presence on the platform beyond the official account. Images No. 1 and No. 2 show an example.

Image No. 1. Snapshot of the account @alice_weidel_afd (26 June 2024), presumably Mrs. Alice Weidel’s official account.

Image No. 2. Snapshot of the account @alice_weidel_ofcl (26 June 2024), which looks remarkably similar to the official account (same photo, description, and videos). This account was removed after we shared this brief with TikTok ahead of publication.

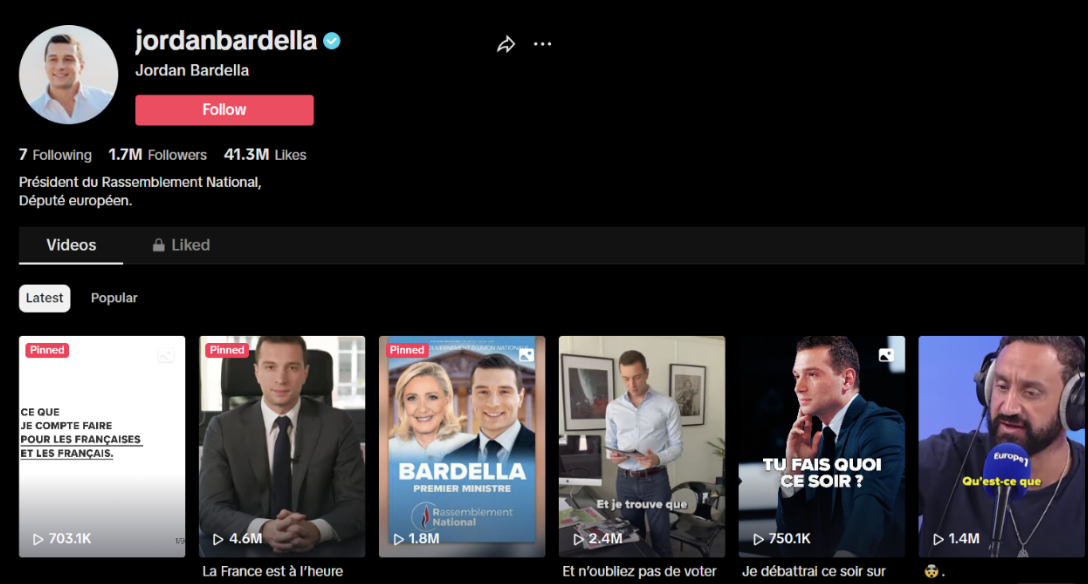

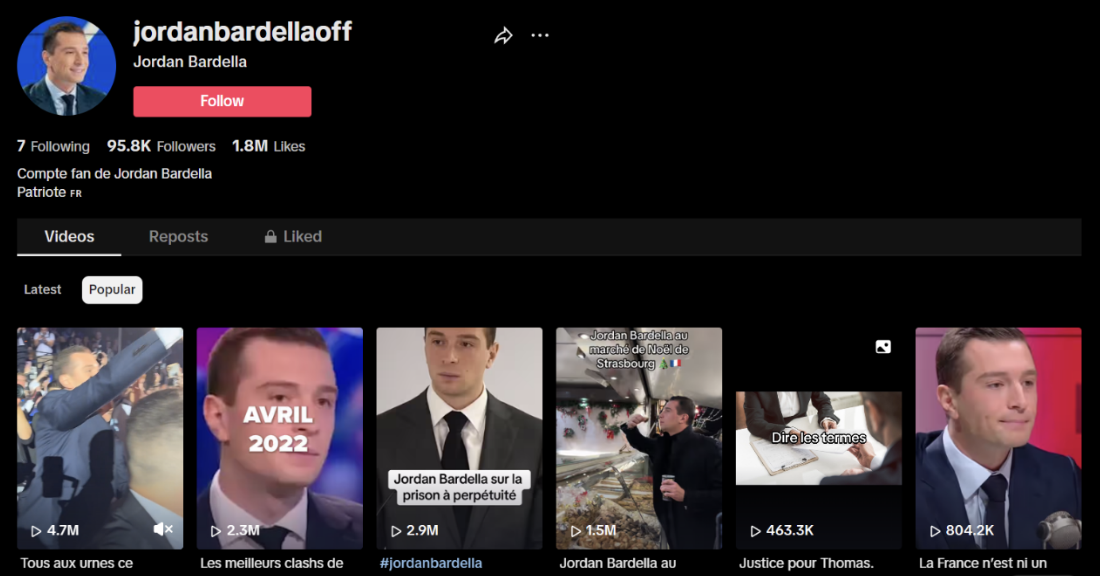

In other cases, even when accounts claim to be fan accounts, they often behave like pure duplicates of party or candidate accounts. Images No. 3 and No. 4 illustrate this issue. Image No. 3 shows the official account of French politician Jordan Bardella, while Image No. 4 displays a “fan account”, as stated in its description. Despite this disclosure, the differences between the two accounts are minimal. The unofficial account uses the politician’s photo and name in both the handle and the account name, and even adds “Off” next to its name, potentially misleading viewers into thinking it is the official account. Both accounts post very similar videos.

Image No. 3. Snapshot of the account @jordanbardella (26 June 2024), Jordan Bardella’s official account.

Image No. 4. Snapshot of the account @jordanbardellaoff (26 June 2024), a fan account of Jordan Bardella. This account was later removed by TikTok after we flagged it a second time.

“Murky accounts” affect the integrity of TikTok’s service in three significant ways. First, they wield disproportionate visibility and influence on the platform. Whether automated or not, when a large number of accounts exclusively share or reshare content from certain politicians or parties, they artificially boost that content’s visibility. This deceptive practice increases the likelihood of such content being promoted on the For You Feed, potentially distorting online perceptions of support for certain parties or candidates.

The proliferation of such accounts on the platform also undermines users’ ability to make informed decisions about the political content they engage with. Moreover, the striking similarities between the content posted by official accounts, murky accounts and nominal fan accounts raise the question of who these accounts are linked to. TikTok’s policies do little to address this lack of transparency. Although verified badges exist, they are mandatory for politicians and parties only in the US.

Furthermore, impersonation and “fan-based” accounts that only share politician/party content are an easy way to circumvent TikTok’s more restrictive policy on Government, politician and party accounts (GPPPA). Accounts designated as GPPPA lack access to several features, including incentive programs, creator monetization features, advertising options, campaign or election fundraising, and the Commercial Music Library (CML). But the category is meaningless if party or candidate accounts can be duplicated at will outside the category.

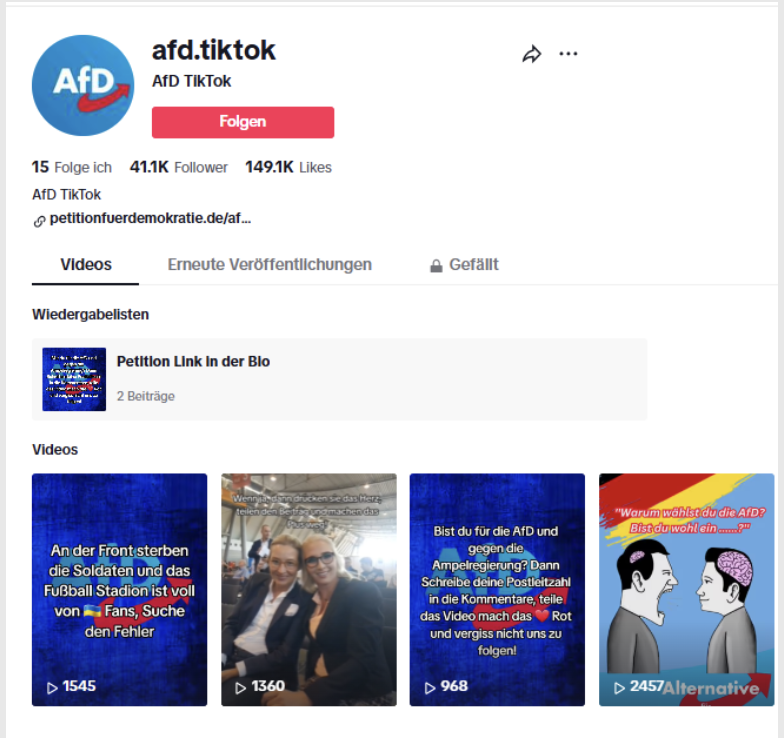

For all intents and purposes, “murky accounts” act like official politician or party accounts, using the same logos and names and often sharing identical content. However, they evade enforcement due to TikTok’s inconsistent application of its policies. Simply adding a “fan account” label in the description allows them to skirt these rules, even though TikTok’s integrity policy states that the fan status needs to be reflected in the account name.

It is highly likely that many of these accounts are created by politicians or parties and their teams. Researcher Kieran Murphy, who covered Germany for DRI’s EP Elections Social Media Monitoring Hub, analysed a Telegram channel between 9 March and 10 June in which the AfD youth organisation Junge Alternative (JA) was coordinating its campaigns on TikTok. The channel, dubbed ‘TikTok Guerrilla’, explicitly called on members to engage in dishonest and manipulative practices in order to spread the party’s content as widely as possible. It encouraged members to alter videos in ways that would bypass TikTok’s automatic moderation. Instructions included adding music to the clips, editing them to play in a different order, overlaying monologues with more generic scenes and, crucially for our case, creating many new accounts. Unsurprisingly, some of the content created and promoted by the Telegram group is being multiplied by “murky accounts” (e.g. @maximilian.krah.afd). DRI will publish the case study on Germany shortly.

While we cannot be certain who is behind these accounts, TikTok’s lax policies create incentives for political actors to bypass GPPPA restrictions. Even more concerning, parties could use these accounts to spread hate speech or other illegal and harmful content without being held accountable, as they would be if done through official accounts.

VRT, the Flemish public broadcaster, investigated this phenomenon in Belgium in the weeks leading up to the EP Elections. It discovered numerous “sub-accounts” spreading propaganda for Vlaams Belang, a Belgian far-right party, often without clearly disclosing their affiliation. The investigation revealed that these accounts featured significantly more personal attacks on other politicians compared to the party’s official TikTok handle.

TikTok’s laxity stands out compared to other platforms

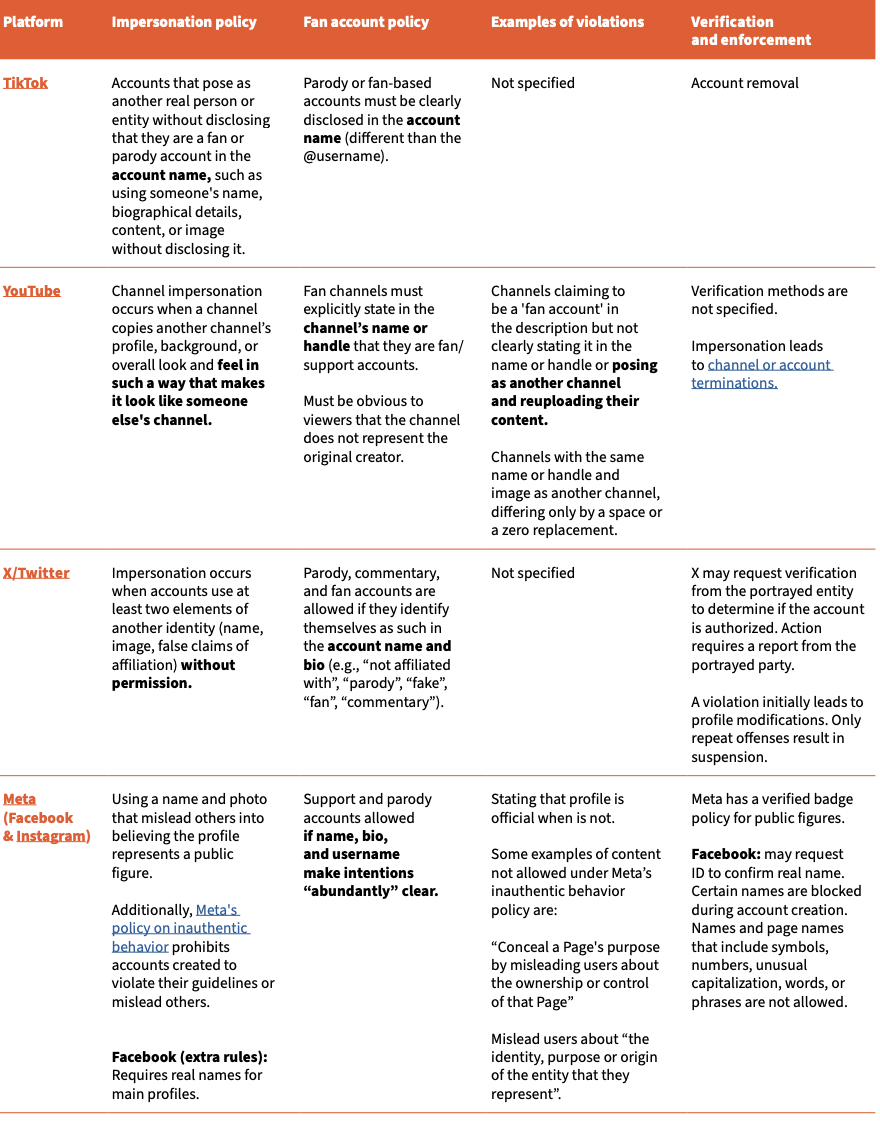

Our analysis of impersonation and fan account policies across five Very Large Online Platforms (VLOPs), namely TikTok, YouTube, X, Instagram and Facebook, revealed that TikTok and X are not only lax but also make circumventing the rules particularly easy (see Table No. 1).

While all platforms prohibit impersonation and make an exception for fan accounts within that category, they vary in their definitions of what qualifies as a fan account, with some adopting more restrictive interpretations than others.

Meta and YouTube have stringent requirements for fan accounts, requiring them to clearly indicate their unofficial status through “obvious” or “abundant” means. Meta insists on disclosure in the account’s name, handle, AND bio, while YouTube only requires it in either the account’s name OR handle. YouTube also gives specific examples of violations, like accounts that claim to be fan accounts but act like official ones or use names differing from official ones by just a zero or a space. These last two examples closely resemble our definition of “murky accounts”.

Table No. 1. VLOPs’ policies on impersonation and fan accounts

*Access the social media policies here: TikTok, YouTube, X/Twitter, Meta, and Instagram.

Facebook implements design features to prevent impersonation. It blocks specific names (likely including those of well-known figures such as politicians and parties) and prohibits symbols, numbers, unusual capitalization, and certain words or phrases in page or profile names. Nonetheless, enforcement has also been a challenge for the platform, as shown by the “crypto scams” scandal, in which Belgian news media organisations and politicians were impersonated to facilitate fraud.

In contrast, TikTok and X employ lax policies. X has a questionable approach where action against impersonation accounts depends on whether the impersonated person or entity has authorised it. In other words, parties or candidates can create numerous accounts or let others impersonate them, creating systemic risks of inauthenticity and artificially boosted engagement.

TikTok requires fan accounts to disclose their status in the account name, but our experience indicates that the platform’s enforcement of its Community Guidelines is extremely lax. Out of 116 accounts reported by DRI, the company removed 51. Many of the accounts we reported gave no indication that they were fan accounts and yet, at the time of writing, are still online.

Image No. 5. Snapshot of the account @afd.tiktok (8 July 2024)

Other accounts, like the above-mentioned @jordanbardellaoff, did not disclose their fan status in the account name, thus violating explicit platform policy. Yet we had to report that account twice before the platform removed it.

TikTok’s response

DRI provided TikTok with a copy of this brief ahead of publication. The company did not dispute our findings but reiterated their policies on impersonation and verification of political accounts. It stated: “We rigorously protect our platform’s integrity by prohibiting impersonation, helping political accounts apply for verified badges, and partnering with experts to seek feedback on our approach and launch media literacy initiatives that have garnered hundreds of millions of views across Europe”. TikTok also mentioned that some of the accounts DRI reported were already under review.

TikTok’s policies and enforcement are not enough to comply with DSA obligations

According to Article 34 of the DSA, Very Large Online Platforms (VLOPs) are obliged to carry out risk assessments of their platforms to identify “any actual or foreseeable negative effects on civic discourse and electoral processes”. Such “assessments shall also analyse whether and how the risks (…) are influenced by intentional manipulation of their service, including by inauthentic use or automated exploitation of the service.”

According to Article 35, companies must mitigate systemic risks, with possible measures including: (a) adapting the design, features or functioning of their services, including their online interfaces, and (b) adapting their terms and conditions and their enforcement.

The European Commission’s “Guidelines for providers of Very Large Online Platforms and Very Large Online Search Engines on the mitigation of systemic risks for electoral processes”, adopted under the DSA, identify impersonation of candidates as one of the risks to elections and stress the need for platforms to enforce rules against inauthentic accounts, whether created manually or automatically, and against problems like fake engagements, non-transparent promotion of influencers and coordination of inauthentic content creation and behaviour.

We believe that the proliferation of multiple accounts for the same political parties and figures, including candidates, as well as “fan accounts” with ambiguous affiliations that exclusively amplify politicians’ or party content, poses a systemic risk to civic discourse and electoral processes in the European Union. As mentioned earlier, this proliferation undermines the integrity of TikTok’s service because it misleads voters and other users monitoring online discourse around elections, distorts perceptions of online support for specific parties or candidates as these accounts garner massive viewership (millions of views), and provides an easy avenue to circumvent TikTok’s stricter policies regarding government, politician, and party accounts.

We also find that TikTok is failing to mitigate the systemic risks posed by “murky accounts” for several reasons:

- Lack of proactive risk mitigation: Our reports relied on spot-check analysis using manual methods that are accessible to any user. Even with this basic method, we easily found numerous impersonation accounts of high-profile politicians, such as party leaders and national candidates. This suggests that TikTok’s efforts to prevent this phenomenon in the run-up to an election such as the European Parliament elections were insufficient. As mentioned, other platforms make special efforts to ensure integrity of the accounts of politicians. In four successive reports we called on the platform to proactively identify such accounts and to apply its community guidelines to them.

- Lack of enforcement of own guidelines: TikTok’s enforcement of its own policy is inconsistent. While the platform states that accounts must disclose their fan status in the account name, it has not consistently removed accounts that only disclose this status in the description. This lax enforcement makes it easy to circumvent TikTok’s policies and creates incentives for parties to maintain “second official” accounts where they can share harmful content undetected.

- Community Guidelines are not fit for purpose: Even if TikTok applied its Community Guidelines consistently, they are not sufficient to mitigate risks to civic discourse and electoral integrity. In contrast to other platforms, TikTok relies on a very weak signal to consider an account authentic. A user must simply add the word “fan” to the account name. This is an invitation to political parties or candidates who want to artificially boost their presence on the platform. As we have shown above, other platforms use a richer set of signals to avoid circumvention of rules and to promote authenticity.

- TikTok does not apply its own best practice in the EU: Verified badges for political and party accounts are optional in the EU but mandatory in the US. The platform is thus not using its best efforts to fulfil its obligations under the DSA.

Our recommendations

To TikTok (and other VLOPs)

- Update Community Guidelines and policies to prevent the abuse of the fan account category. In particular, the mere mention of the word “fan” should not be enough to circumvent policies on impersonation, labelling of political parties and candidate accounts and general rules of authenticity. Fan accounts should not be forbidden; they are of course covered by freedom of expression. But VLOPs should assess carefully whether they are authentic fan accounts, rather than attempts by parties or candidates to circumvent rules. Measures to mitigate the risk of the abuse of the fan account category should be “reasonable, proportionate and effective”, as established by Art. 35 DSA. For example, fan accounts should show clear signs of community engagement (e.g. following other accounts, engaging with other users), beyond resharing party/politician content. VLOPs should also assess signals that are not visible to users, such as account registration data, fake engagements, automated spam, or coordination of inauthentic content creation or behaviour.

- Implement design features to deter the duplication of accounts, impersonation or inauthentic fan accounts, such as blocking the use of party or politician names.

- Make verified badges mandatory for all political accounts across the EU. VLOPs should provide verified political party accounts of a certain size (measured in parliamentary representation and/or opinion poll results) with a checkmark and should not allow other accounts to use their images and names.

- Prior to every election, conduct a systematic review to detect and address impersonation or inauthentic fan accounts effectively.

- Be consistent with the interpretation and enforcement of their own Community Guidelines and Policies.

To the European Commission

- Issue a request for information (Art. 67 DSA) to TikTok regarding the effectiveness of its current Community Guidelines and policies in preventing impersonation and inauthentic fan accounts of political figures.

This brief was written by Daniela Alvarado Rincón and Michael Meyer-Resende, and it was made possible thanks to the financial support of the Culture of Solidarity Fund powered by the European Cultural Foundation (ECF) in collaboration with Allianz Foundation and the Evens Foundation. Its contents do not necessarily represent the position of the donors.