Executive Summary

The EU’s Digital Services Act (DSA) has created high expectations both in the EU and globally. As enforcement ramps up, attention is turning to whether the Act can meet those expectations. The German snap elections of February 2025 provided a key test. They took place amid concerns about potential foreign influence and the misuse of online platforms, and in light of lessons from previous elections, such as Romania’s presidential election in November 2024. Issues like the misuse of AI, disinformation, political propaganda, biased algorithms, and irregularities in paid political ads all came to the forefront in the German vote.

This brief maps actions taken by key actors to protect online integrity during the German elections:

- The European Commission. As the primary enforcer of the DSA, it indirectly oversaw the election through ongoing cases, soft-law initiatives, and co-regulatory tools, such as the Code of Conduct on Disinformation and the Rapid Response System.

- Germany’s Digital Services Coordinator (DSC) facilitated roundtables and stress tests with relevant stakeholders, supported data access, and established incident response channels.

- The European Board for Digital Services published a DSC Toolkit for elections and is responsible for fostering cross-border cooperation and joint investigations by DSCs. The Board will soon release an annual report on best practices for mitigating systemic risks and advises the Commission on when to activate the crisis mechanism.

- National administrative and judicial authorities played a critical role, particularly in incident response, determining the justiciability of the DSA, and establishing potential benchmarks for when an election may be considered compromised on the grounds of online integrity.

- Executive actors are powerful drivers of media attention: they can elevate issues and place them on the political agenda.

This analysis highlights advocacy opportunities, gaps, and challenges for civil society organisations (CSOs) and other stakeholders when engaging with the regulatory landscape and protecting online integrity during elections.

Germany’s Snap Elections: A Test Case for the DSA and EU Digital Regulations

Although polls leading up to Germany’s snap elections on 23 February did not suggest major swings in voter support (and the results confirmed the polls), the campaign still drew intense national and international scrutiny.

One key reason for this was the elections’ broader geopolitical significance – widely seen as an indicator of how Germany, and by extension the EU, would position itself in relation to the new administration of President Donald Trump in the United States.

The United States loomed large in the background because an administration official, Elon Musk, used his X platform to promote the AfD party, which the German security agencies have designated as “in parts right-wing extremist”. Musk published posts on X and an op-ed in the German daily Die Welt in favour of the party, and interviewed its leader, Alice Weidel, in a supportive conversation streamed on his platform.1 This open support from a foreign platform owner who was also a US administration official marked an unprecedented form of foreign meddling in a democratic process.

At the same time, Meta abruptly accused the fact-checkers it had worked with for many years of censorship (even though Meta itself had made all decisions on deletions), and terminated its fact-checking programme in the United States.2 Meanwhile, high-level US officials ramped up pressure against key EU digital regulations (the DSA, DMA, and AI Act), arguing that they posed a threat to US business interests and US notions of free speech.3

Given this backdrop, Germany’s elections became a critical test case for the EU Digital Regulatory Framework, particularly the DSA. Following the controversial annulment of Romania’s presidential election due to allegations of foreign interference on TikTok, the ability of the DSA to safeguard a fair and transparent online debate was under intense scrutiny.

In this brief, we reconstruct the mechanisms through which different authorities worked to safeguard the online integrity of the German elections, mainly under the DSA framework. We aim here to support other organisations working at the member-state level in advocating for stronger protections during elections. We also highlight key challenges and gaps – ultimately addressing a fundamental question: Is the DSA enough to tackle the online platform-related risks to elections?

The “Menu” Available in the DSA – and Beyond – to Protect Online Integrity During Elections

We mapped actions taken by key authorities during Germany’s snap elections, including by: (i) the European Commission; (ii) Germany’s DSC; (iii) the European Board for Digital Services; (iv) other national authorities; and (v) political authorities. Our analysis highlights potential advocacy avenues provided by these authorities, along with their respective gaps and challenges.

1. The European Commission – DG Connect

1.1. Indirect enforcement through ongoing open cases against VLOPs and VLOSEs

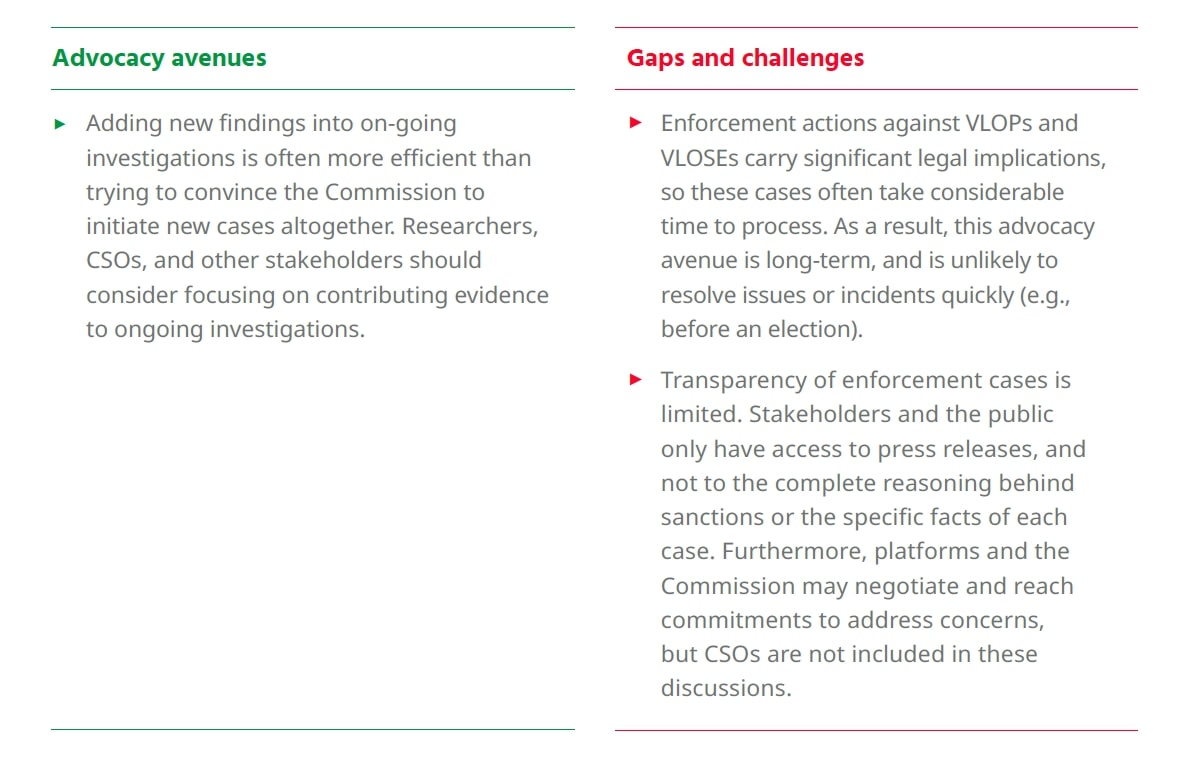

Although the Commission did not open new DSA enforcement cases specifically related to the German elections, it leveraged existing cases to monitor the efforts by very large online platforms (VLOPs) and very large online search engines (VLOSEs) to mitigate online risks to election integrity.

For example, amid claims that Musk might also support the AfD by altering the algorithmic amplification of the party’s content on the platform, the Commission expanded its ongoing investigation into X’s compliance with the DSA.4 On 17 January, the Commission took a number of steps, including: (i) requesting internal documentation regarding the platform’s recommender system and any recent changes; (ii) issuing a retention order for X to preserve documents related to future algorithm changes between 17 January and 31 December 2025; and (iii) seeking access to X’s API to examine the enforcement of content moderation and the virality of accounts.5

Similarly, last year, the Commission opened a case against TikTok for potentially failing to meet its obligations to identify and mitigate systemic risks to election integrity.6 Although the case is linked to TikTok’s role in the Romanian elections, the Commission’s press release made clear that the investigation would “focus on the platform’s management of risks to elections and civic discourse” – in particular, related to its recommender systems and political advertising policies. This suggests that the investigation has implications for all EU elections.

Further, the Commission’s retention order to TikTok, requiring the platform to “freeze and preserve data related to actual or foreseeable systemic risks to electoral processes and civic discourse within the EU” from 24 November 2024 to 31 March 2025, explicitly covers data related to the German elections.

Facebook and Instagram are also under investigation for the proliferation of deceptive advertisements, disinformation campaigns, and coordinated inauthentic behaviour on their platforms, particularly as these issues pose risks to civic discourse, electoral processes, and fundamental rights.7 The investigation is not tied to a specific election, so it can reasonably be assumed to apply to all EU elections.

1.2. Enforcement through soft-law instruments: Guidelines on electoral integrity

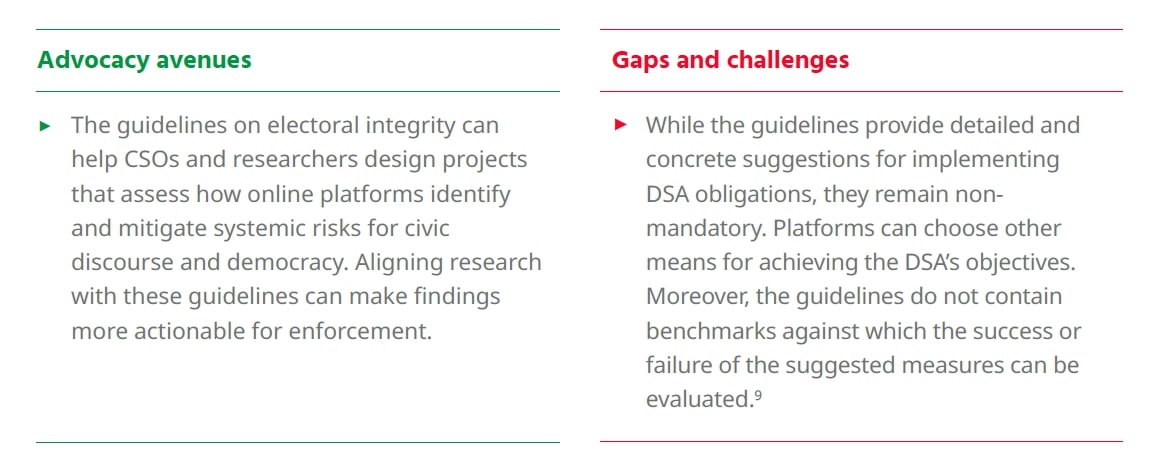

In the context of the 2024 European Parliament elections, the Commission issued guidelines on online electoral integrity, outlining measures for VLOPs and VLOSEs to address many of the systemic risks related to elections.8 These guidelines were designed as a broader framework applicable to all EU elections. By translating the general provisions of Articles 34 and 35 of the DSA into more concrete terms, the guidelines provide a crucial roadmap for monitoring platforms’ compliance with the DSA.

Moreover, the guidelines address risks that are not covered by existing regulations, or that are covered only by rules not yet in force. For example, they suggest measures to tackle issues such as the transparency of political advertising and the mitigation of generative AI risks. These topics are also addressed by the Political Advertising Regulation and the AI Act, but neither regulation is yet fully in force. By including these measures in a DSA enforcement document, the Commission provided a mechanism for addressing risks that are not yet regulated.

In other areas, the guidelines go beyond the DSA. For example, they introduce measures on data access and third-party scrutiny, encouraging VLOPs and VLOSEs to exceed the requirements of Article 40. These measures promote ad-hoc cooperation, including developing tailored tools, features, and visual dashboards, adding data points to existing APIs, and providing access to specific databases.

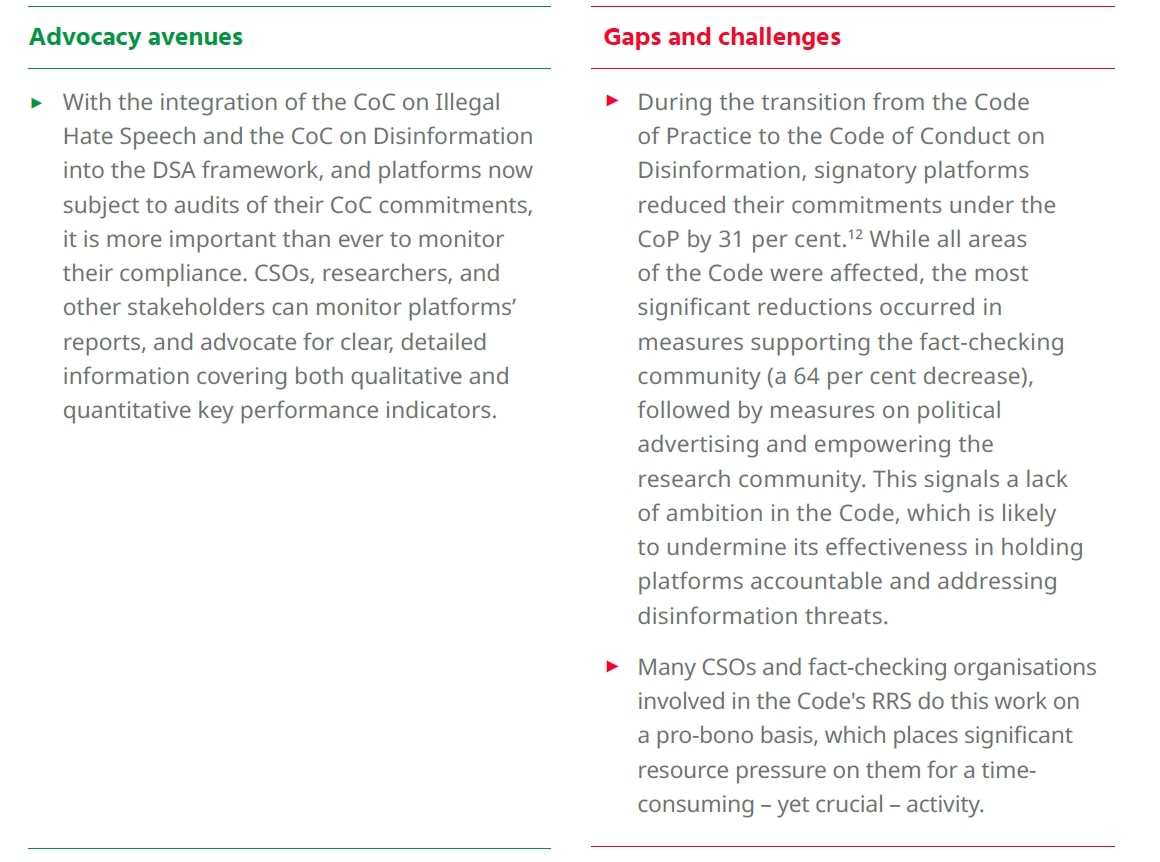

1.3. Enforcement through co-regulatory instruments: Codes of conduct on illegal hate speech and disinformation

In the weeks leading up to the German elections, the Commission finalised the integration of the Code of Conduct on Countering Illegal Hate Speech Online+ (CoC on Illegal Hate Speech) and the Code of Practice on Disinformation (CoC on Disinformation) into the DSA framework.10 This long-anticipated step has two significant implications. First, platform commitments under these codes will now be subject to annual independent audits (Article 37[1][b] DSA). Second, compliance with these codes is explicitly recognised as a valid risk mitigation measure (Article 35[1][h]). This means that a platform’s refusal to participate without proper justification could be taken into account when determining whether it has breached its obligations under the DSA (Recital 104).

While the CoC on Illegal Hate Speech applies solely to online platforms, the CoC on Disinformation also includes other key stakeholders, such as the online advertising industry, ad-tech companies, fact-checkers, and CSOs. This broader inclusion has created an important space for platforms to engage and collaborate with these diverse stakeholders.

A key aspect of this collaboration is the Rapid Response System (RRS), outlined in Commitment 37 of the CoC on Disinformation, which aims to strengthen coordination and collaboration among signatories during crises or elections. Given its importance, the Commission stressed in its opinion on the Code that the implementation of the RRS “will be thoroughly scrutinised” (p. 9).11 The Commission also highlighted that the RRS should cover all national elections (presidential and parliamentary) in member states and, where possible, extend to regional and local elections, as well as referendums, within the EU. Moreover, the Commission stressed that, for cooperation to be meaningful, the RRS should be activated well in advance, cover all relevant languages, include swift reviews of the information received, provide detailed feedback, and ensure that necessary follow-up actions are taken promptly, in line with the time-sensitive nature of the electoral context.

2. Germany’s Digital Services Coordinator

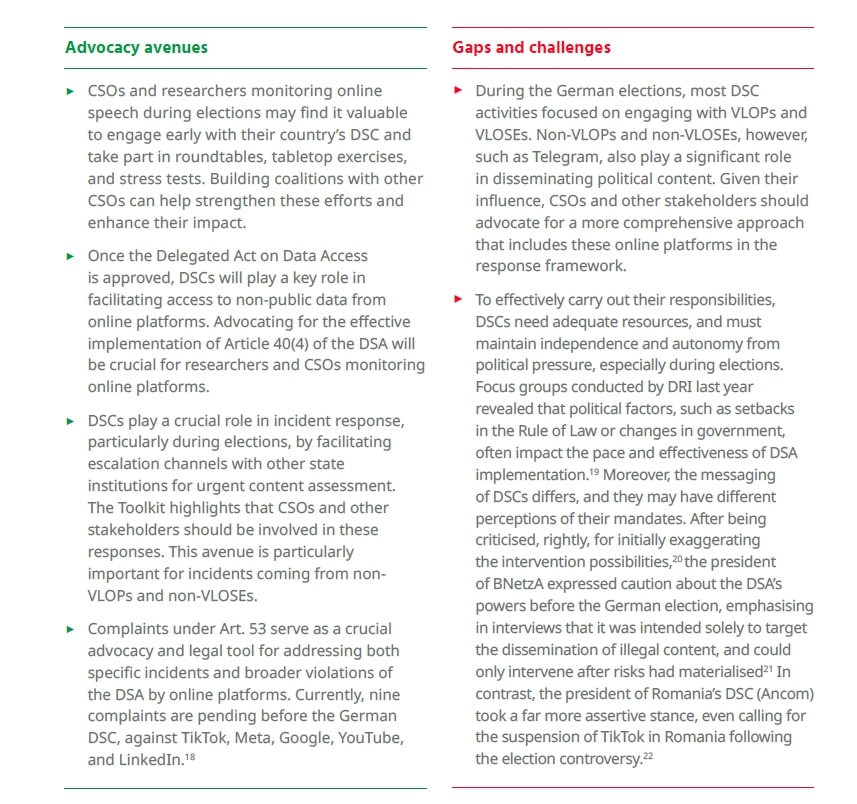

DSCs are the single points of contact for all matters related to the DSA in EU member states. As such, Germany’s DSC, the Federal Network Agency (BNetzA), played an active role during the German snap elections. On 24 January, BNetzA hosted a roundtable with representatives from Google, LinkedIn, Meta, Microsoft, Snapchat, TikTok, X, national authorities, and CSOs to discuss election-related trends and risk mitigation strategies by VLOPs and VLOSEs.13

A week later, on 31 January, BNetzA and the European Commission conducted a stress test with the same platforms, where authorities presented various fictitious scenarios to assess the platforms’ ability to respond swiftly to potential breaches.14 These scenarios included information manipulation via deepfakes, coordinated inauthentic behaviour, incitement to online violence, and the suppression of online voices through harassment.15

Roundtables and stress tests are among the tools recommended in the Toolkit for DSCs to enhance election preparedness.16 The Toolkit encourages DSCs to establish early connections with stakeholders, VLOPs, and VLOSEs to share knowledge and resources. DSCs should also facilitate the publication of voter information, provide DSA-specific guidance for candidates, and promote media literacy campaigns. Crucially, DSCs are advised to support research and data sharing, monitor political advertising and ad libraries, and share lessons learned in post-election reports.

DSCs also have a role in incident response. The Toolkit advises DSCs to create incident protocols, establish networks, and ensure key escalation channels are in place to respond swiftly to complaints. Such escalation channels should involve all relevant stakeholders, including “CSOs, academic institutions, state institutions and bodies that can be mobilised in the case of elections for monitoring and knowledge-sharing purposes”.17 Moreover, under Article 53 of the DSA, DSCs are responsible for handling user complaints about online platforms’ non-compliance with the DSA. This effectively creates another avenue for addressing incidents.

Incident response by DSCs is particularly important for holding non-VLOPs and non-VLOSEs accountable. While such platforms are not subject to the systemic-risk obligations that apply to VLOPs and VLOSEs, they must still comply with several key DSA requirements, such as notice-and-action mechanisms, complaint and redress procedures, and recommender system transparency. As smaller platforms are generally not signatories to codes of conduct, DSC coordination of incident response for these platforms becomes even more essential.

DSCs are advised to start these activities between one and six months before the election campaign period, and to continue them for at least one month after the elections. In the case of snap elections, such as Germany’s, the Toolkit recommends that DSCs prioritise activities strategically, for example by focusing on engaging with stakeholders and setting up response systems.

3. The European Board for Digital Services (EBDS)

As an independent advisory group, the EBDS supports DSCs and the European Commission in overseeing VLOPs and VLOSEs by, among other activities: i) facilitating cross-border cooperation and joint investigations by DSCs; ii) publishing an annual report outlining best practices for mitigating systemic risks (Art. 35.2); and iii) advising the Commission on when to activate the crisis mechanism (Art. 36.1).23

To our knowledge, the EBDS has not yet facilitated any cross-border investigations. We anticipate the publication of the EBDS’s annual report on systemic risks later this year. It will come amid warnings from multiple actors, including the DSA Civil Society Coordination Group, that the recent risk assessment reports published by VLOPs and VLOSEs “fail to adequately assess and address the actual harms and foreseeable negative effects of platform functioning”.24

4. National judicial and administrative authorities

While not directly responsible for enforcing the DSA, national security agencies play a vital role in protecting election integrity online, particularly against foreign interference. During the German elections, the country’s domestic intelligence service (BfV) established a task force to increase cooperation with national and international partners and to counter foreign influence operations.26 Meanwhile, the Federal Ministry of the Interior launched the Central Office for the Detection of Foreign Information Manipulation (ZEAM).27 This initiative, which also involved the Federal Foreign Office, the Ministry of Justice, and the Press and Information Office, focused on identifying and addressing foreign disinformation and other hybrid threats. The Federal Office for Information Security (BSI) oversaw cybersecurity measures.

Beyond security agencies, electoral authorities are central to ensuring information about the electoral process is trustworthy. In Germany, the Federal Returning Officer, responsible for overseeing elections, actively worked to identify and correct misinformation.28

Political financing institutions also play a key role when campaign financing intersects with digital political advertising. During the German election campaign, some organisations29 and political figures, including then-candidate Friedrich Merz,30 argued that Musk’s support for the AfD on X could constitute an illegal party donation. Under the Political Parties Act, election advertising by third parties is considered a party donation, and donations from non-EU countries are prohibited. However, how this rule applies in the digital context remains uncertain.

Judicial authorities are another crucial piece of the puzzle. As enforcement of the DSA evolves, litigation is likely to become an important tool for rightsholders, including users and researchers, to clarify and strengthen its provisions. In January, DRI, with support from its legal partner, Gesellschaft für Freiheitsrechte (GFF), filed the first-ever lawsuit against X under Article 40(12) of the DSA.31 The case, which is still ongoing, seeks access to publicly available data to identify systemic online risks to the German snap elections.

Judicial authorities may also have the power to take an extreme measure: annulling an election. The 2024 Romanian presidential election is the most significant recent example. Romania’s Constitutional Court annulled the election, citing irregularities in the campaign, including foreign interference through TikTok and irregular paid political ads online, and stating that these breaches “distorted the free and fair nature of the vote, compromised electoral transparency, and disregarded legal provisions on campaign financing”.32 The Court found that the disproportionate online exposure of one candidate affected the fundamental right to run for office, significantly distorting the election process. The decision has nonetheless generated serious controversy.

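More broadly, relevant national judicial and administrative authorities can, under Articles 9 and 10 of the DSA, issue orders to providers of intermediary services, including VLOPs and VLOSEs, to act against illegal content and to provide information about specific users.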

5. European and national executive authorities

The German elections attracted significant scrutiny from political and executive authorities. In Germany, the Bundestag’s Digital Committee invited representatives from X, Meta, and TikTok to discuss the upcoming elections, but the platforms declined, citing insufficient notice.33 On 22 January, Federal Minister of the Interior Nancy Faeser met with major tech platforms to discuss measures against hate crimes and targeted disinformation campaigns, including those aimed at the election process or candidates.34 The Ministry held similar meetings with other stakeholders, including CSOs.

At the EU level, on 30 January, 12 member states urged the European Commission to use its powers under the DSA in response to “disruptive interventions in public debates during key electoral events” that, according to their letter, represented a direct challenge to the EU’s stability and sovereignty.35

Concerns about the online integrity of the German elections also reached the European Parliament. On 4 January, German MEP Damian Boeselager wrote to EU Executive Vice-President Henna Virkkunen, questioning whether Musk’s use of the platform X met the transparency requirements of the DSA. Virkkunen replied that the Commission “is determined to advance with the case expeditiously and, while respecting due process, adopt a decision closing the proceedings as early as legally possible.”36 Other lawmakers voiced similar concerns on their own social media channels. Eventually, on 21 January, the European Parliament held a debate on the need to enforce the DSA and protect democracy “against foreign interference and biased algorithms”.37

So, Can the DSA Safeguard Election Integrity?

As the first regulation of its kind addressing online platforms – extending beyond illegal content to include due diligence obligations and requiring measures to tackle legal but harmful content and platform design risks – the DSA has set high expectations.

However, these expectations have been met with growing scepticism following the release of the first Risk Assessment Reports from VLOPs and VLOSEs, arguably the DSA’s most significant innovation.

The reports have disappointed, including in their assessment of systemic risks to civic discourse and elections. Key concerns include inconsistencies across the reports, a lack of meaningful data or analysis on the effectiveness of mitigation measures, minimal transparency about teams handling civic discourse and electoral risks, little to no information on platform design risks, and, crucially, the absence of stakeholder consultation.38 Additionally, the reports tend to focus narrowly on elections, rather than on fostering a broader, healthier political discourse online.

But the DSA does not stand alone. It is part of a broader institutional framework designed to guarantee democratic resilience against both online and offline threats. This brief focuses only on the critical role that the DSA and national institutions play in safeguarding online integrity. Many other regulations, such as the European Media Freedom Act, the Political Advertising Regulation, the Digital Markets Act, and the AI Act, also contribute to this end.

The European Union acknowledges this broader understanding of democratic resilience through, for example, the proposed European Democracy Shield, a plan included in Commissioner for Democracy, Justice, the Rule of Law and Consumer Protection Michael McGrath’s mission letter and the Commission’s 2025 work programme.39 The Shield aims to address major threats to democracy in the EU, such as rising extremism and disinformation, though with a strong focus on foreign interference.

For CSOs, researchers, and other stakeholders working to create safer online spaces during elections, engaging with the broader regulatory landscape is essential. While advocating across multiple areas can be challenging, doing so strengthens democratic resilience beyond digital issues alone. It also reinforces the DSA’s role not as a standalone tool wrongly seen as “limiting free speech”, but as part of a wider framework for protecting democracy.

Please check the PDF version for references and citations.

Acknowledgements

This brief was written by Daniela Alvarado Rincón, Digital Democracy Policy Officer (DRI). This brief is part of the Tech and Democracy project, funded by Civitates and conducted in partnership with the European Partnership for Democracy. The sole responsibility for the content lies with the authors and the content may not necessarily reflect the position of Civitates, the Network of European Foundations, or the Partner Foundations.